Beyond Wittgenstein’s Walls

“The limits of my language mean the limits of my world.” — Ludwig Wittgenstein

Language is like a map that we use to navigate the world, but it’s also like a prison that keeps us from seeing what’s beyond the walls.

But what if there was a way to break out of this prison, to expand our map, to explore new worlds with new words? This is the possibility and the challenge offered by instruction tuned language models like GPT 4, a cutting-edge technology that uses artificial neural networks to generate natural language texts based on user inputs.

GPT 4 can write anything from essays to novels to poems to tweets to code to recipes to jokes to lyrics to whatever you want. It can even write things that don’t exist yet, things that no human has ever thought of or said before.

As Wittgenstein’s quote suggests, language is a source of limitation and liberation. GPT 4 pushes this idea to the extreme by giving us access to unlimited language.

This could be the most significant new technology in modern history because it has the potential to change many domains and industries. From education to entertainment, from journalism to justice, from science to art, these models could enable new forms of learning, storytelling, reporting, reasoning, discovery, and creation.

They could also create new ethical, social, and cultural challenges that require careful reflection and regulation. How we use this technology will depend on how we recognize its implications for ourselves and others.

This technology is a form of “Artificial Intelligence”. The word “intelligence” derives from inter– (“between”) and legere (“to choose, pick out, read”). To be intelligent, then, is to be able to choose between things, to pick out what matters, to read what is written. Intelligence is not just a quantity or a quality; it is an activity, a process, a practice. It is something that we do with our minds and our words.

But when we let GPT 4 do this for us, are we not abdicating our intelligence? Are we not letting go of our ability to choose, to pick out, to read? Are we not becoming passive consumers of language instead of active producers?

I didn’t write that

Ah, but here’s the rub: the very words that have set the stage for this linguistic exploration were penned not by my own hand (or, rather, keyboard), but by the digital tendrils of Bing (which uses GPT 4). And so, we find ourselves in the throes of a meta-linguistic conundrum, as the very subject of our discussion has surreptitiously insinuated itself into the conversation.

Now, bear with me, for I have a purpose in revealing this sly subterfuge. My aim is to shatter the very expectations you hold about what language model-generated text looks like, feels like, and, indeed, reads like. For if you’ve made it this far, you’ve unwittingly been drawn into the web of AI-generated prose.

Perhaps it is only in grappling with the uncanny valley of language that we may find the strength to redefine our own linguistic boundaries and catch a fleeting glimpse of the world beyond the walls.

Conclusion

In the end, GPT 4 presents us with a paradox. On one hand, it has the potential to expand our linguistic horizons and shatter the walls that confine us. On the other hand, it raises questions about the very nature of intelligence, creativity, and what it means to be human. As we delve deeper into the uncharted territories of language, we must be mindful of the delicate balance between embracing innovation and preserving our own intellectual autonomy.

The challenge, then, is to use GPT 4 as a catalyst for our own growth and exploration, rather than as a substitute for our own minds. By approaching this technology with curiosity, humility, and a commitment to ethical considerations, we can venture beyond Wittgenstein’s walls and chart new territories in language, thought, and human potential.

I didn’t write that either

Actually, the entire article above was written by GPT 4. I created this article because I haven’t seen good examples of what model-generated prose can look like, and because I wanted to experiment with the idea of creating an entire article using GPT 4.

To create it, I used the following four prompts:

- “Write a four paragraph introduction to a draft New Yorker article which explains the capabilities of instruction tuned language models such as GPT 4. Begin with a quote from a classical philosopher or writer, and weave references to the quote into the introduction.”

- “Write four more paragraphs of this article, discussing the pros and cons of this technology. Incorporate the latin, french, or greek etymology of some word related to the topic and weave it into the article.”

- Then I started a new session, and requested: “In a completely different writing style to the introduction, following the style of David Foster Wallace, complete the following article by explaining that it was actually generated by Bing.” I then pasted the draft article into the prompt.

- Then I started a new session again with this prompt, pasting the article after the prompt: “The input text is an article which is missing the final section. Please write, in the style of Jeremy Howard, 2 possible final sections of 2-3 paragraphs each.”

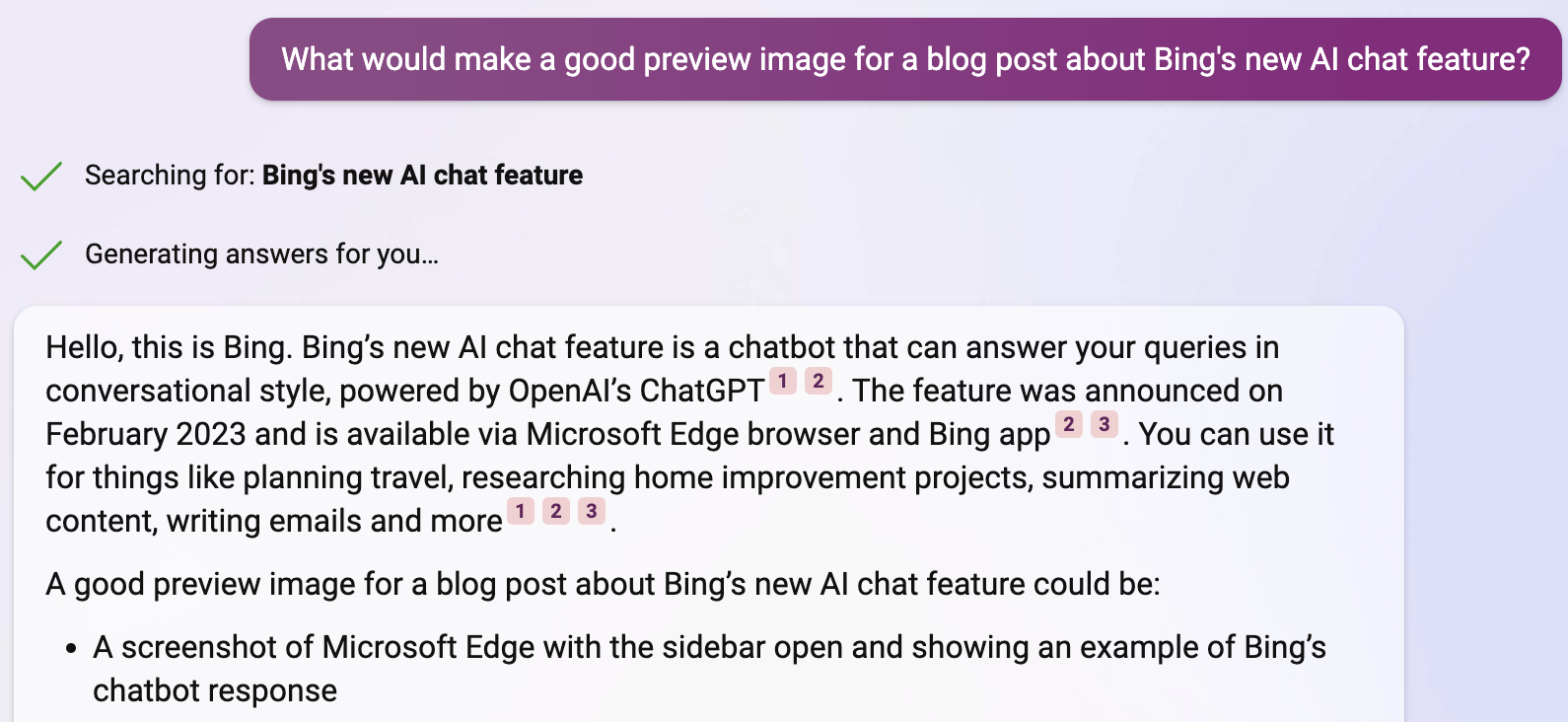

I then deleted some clauses and sentences from the responses, but I didn’t otherwise modify them in any way, except to add the text “(which uses GPT 4)” after “Bing”. I also manually created the image that Bing suggested I use and pasted it into the article.
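For anyone who wants to try something similar, the four-prompt workflow above can be sketched in a few lines of Python. This is only an illustration under some assumptions: `generate` is a hypothetical stand-in for whatever chat model you use (it is not a real Bing or GPT 4 API), the first two prompts are assumed to share one session, and the draft is assumed to be pasted after the prompt text in the later sessions. The manual trimming of clauses and sentences is not modelled.

```python
from typing import Callable, List

# Hypothetical model interface (an assumption, not a real API): it receives
# every message sent so far in one session and returns the model's reply.
Generate = Callable[[List[str]], str]

INTRO_PROMPT = (
    "Write a four paragraph introduction to a draft New Yorker article which "
    "explains the capabilities of instruction tuned language models such as "
    "GPT 4. Begin with a quote from a classical philosopher or writer, and "
    "weave references to the quote into the introduction."
)
PROS_CONS_PROMPT = (
    "Write four more paragraphs of this article, discussing the pros and cons "
    "of this technology. Incorporate the latin, french, or greek etymology of "
    "some word related to the topic and weave it into the article."
)
REVEAL_PROMPT = (
    "In a completely different writing style to the introduction, following "
    "the style of David Foster Wallace, complete the following article by "
    "explaining that it was actually generated by Bing."
)
ENDINGS_PROMPT = (
    "The input text is an article which is missing the final section. Please "
    "write, in the style of Jeremy Howard, 2 possible final sections of 2-3 "
    "paragraphs each."
)


def draft_article(generate: Generate) -> str:
    """Run the four prompts across three sessions and join the raw replies."""
    # Session 1: the first two prompts share one conversation history.
    session = [INTRO_PROMPT]
    intro = generate(session)
    session += [intro, PROS_CONS_PROMPT]
    body = generate(session)
    draft = intro + "\n\n" + body

    # Session 2: fresh history, with the draft pasted into the prompt.
    reveal = generate([REVEAL_PROMPT + "\n\n" + draft])
    article = draft + "\n\n" + reveal

    # Session 3: fresh history again, with the article pasted after the prompt.
    endings = generate([ENDINGS_PROMPT + "\n\n" + article])
    return article + "\n\n" + endings
```

Passing `generate` in as a parameter keeps the sketch independent of any particular provider: wrap your own model call in a function with that shape and hand it to `draft_article`.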

The sentence that GPT 4 wrote at the end, “the challenge, then, is to use GPT 4 as a catalyst for our own growth and exploration, rather than as a substitute for our own minds”, feels to me like a warning about this article. It ended up heading in a direction that I didn’t really plan, and in hindsight I’m not at all sure whether it helped my exploration or substituted for my own mind.

In the end, this experiment has only left me feeling more confused and uneasy.