Most artificially intelligent systems are based on neural networks, algorithms inspired by biological neurons found in the brain. These networks can consist of multiple layers, with inputs coming in one side and outputs going out of the other. The outputs can be used to make automatic decisions, for example, in driverless cars.

Attacks to mislead a neural network can involve exploiting vulnerabilities in the input layers, but typically only the initial input layer is considered when engineering a defense. For the first time, researchers augmented a neural network’s inner layers with a process involving random noise to improve its resilience.

Artificial intelligence (AI) has become relatively common; chances are you have a smartphone with an AI assistant or you use a search engine powered by AI. While AI is a broad term that covers many different ways to process information and sometimes make decisions, AI systems are often built using artificial neural networks (ANNs) analogous to those of the brain.

And like the brain, ANNs can sometimes get confused, either by accident or by the deliberate actions of a third party. Think of something like an optical illusion: it might make you feel like you are looking at one thing when you are really looking at another.

The difference between things that confuse an ANN and things that might confuse us, however, is that some visual input could appear perfectly normal, or at least be understandable to us, but may nevertheless be interpreted as something completely different by an ANN.

A trivial example might be an image-classifying system mistaking a cat for a dog, but a more serious example could be a driverless car mistaking a stop signal for a right-of-way sign. And it’s not just the already controversial example of driverless cars; there are medical diagnostic systems, and many other sensitive applications that take inputs and inform, or even make, decisions that can affect people.

As inputs aren’t necessarily visual, it’s not always easy to see at a glance why a system might have made a mistake. Attackers trying to disrupt a system based on ANNs can take advantage of this, subtly altering an anticipated input pattern so that it will be misinterpreted, and the system will behave wrongly, perhaps even problematically.
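To make the idea of a subtle alteration concrete, here is a minimal sketch using a linear classifier rather than a full neural network. It is not taken from the research described in this article. The weights, bias, input values and the `perturb` helper are all made up for illustration. Each input value is nudged by at most `epsilon` in the direction that lowers the decision score, a sign-of-gradient step, which is enough to flip the decision.

```python
def score(weights, bias, x):
    """Linear decision score; a positive score means 'stop sign' in this toy."""
    return sum(w * v for w, v in zip(weights, x)) + bias

def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def perturb(weights, x, epsilon):
    """Move every input by at most epsilon in the direction that lowers the score."""
    return [v - epsilon * sign(w) for w, v in zip(weights, x)]

# Hypothetical values, chosen only to illustrate the effect.
weights = [0.8, -0.5, 0.3, -0.4]
bias = -0.1
x = [0.5, 0.2, 0.4, 0.3]

x_adv = perturb(weights, x, epsilon=0.15)
clean = score(weights, bias, x)         # positive: classified as 'stop sign'
attacked = score(weights, bias, x_adv)  # negative: decision has flipped

print(round(clean, 3), round(attacked, 3))
```

No single input value changes by more than 0.15, yet the score drops by `epsilon` times the sum of the absolute weights, from about 0.2 to about -0.1, so the classification changes.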

There are some defense techniques for attacks like these, but they have limitations. Recent graduate Jumpei Ukita and Professor Kenichi Ohki from the Department of Physiology at the University of Tokyo Graduate School of Medicine devised and tested a new way to improve ANN defense.

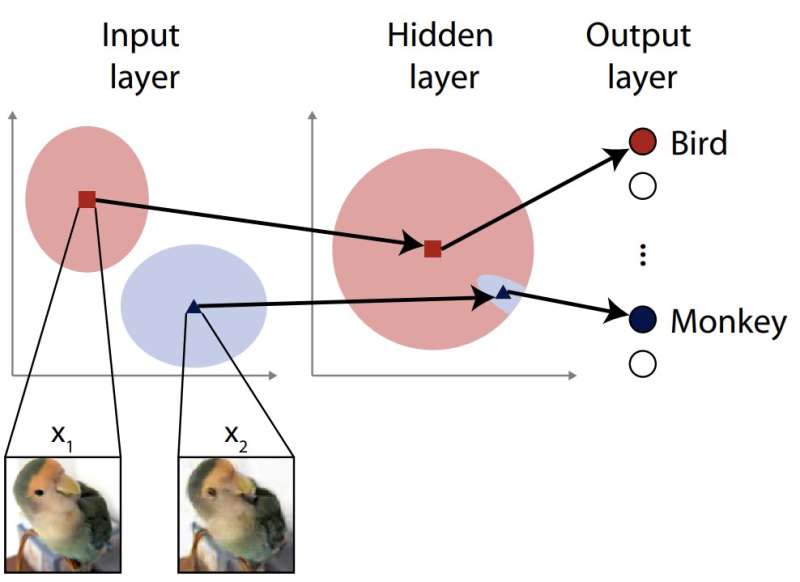

“Neural networks typically comprise layers of virtual neurons. The first layers will often be responsible for analyzing inputs by identifying the elements that correspond to a certain input,” said Ohki.

“An attacker might supply an image with artifacts that trick the network into misclassifying it. A typical defense for such an attack might be to deliberately introduce some noise into this first layer. It sounds counterintuitive that this might help, but by doing so, it allows for greater adaptations to a visual scene or other set of inputs. However, this method is not always so effective and we thought we could improve the matter by looking beyond the input layer to further inside the network.”

Ukita and Ohki aren’t just computer scientists. They have also studied the human brain, and this inspired them to apply a phenomenon they knew about there to an ANN: adding noise not only to the input layer, but to deeper layers as well. This is typically avoided because it’s feared that it will reduce the effectiveness of the network under normal conditions. But the duo found this not to be the case. Instead, the noise promoted greater adaptability in their test ANN, which reduced its susceptibility to simulated adversarial attacks.
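As a rough illustration of what adding noise to deeper layers can look like in practice, the sketch below runs a tiny two-layer network and optionally adds Gaussian noise at the input and at the hidden layer. It is not the researchers’ implementation. The network size, the weights, and the names `forward`, `input_sigma` and `hidden_sigma` are assumptions made for this example, and no training is shown.

```python
import random

def dense(vec, weights, biases):
    """Fully connected layer: each row of weights produces one output value."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weights, biases)]

def relu(vec):
    """Element-wise rectified linear activation."""
    return [max(0.0, v) for v in vec]

def add_noise(vec, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to each value."""
    if sigma == 0.0:
        return list(vec)
    return [v + rng.gauss(0.0, sigma) for v in vec]

def forward(x, params, input_sigma=0.0, hidden_sigma=0.0, rng=None):
    """Run the network, optionally injecting noise at the input and hidden layer."""
    rng = rng or random.Random()
    w1, b1, w2, b2 = params
    x = add_noise(x, input_sigma, rng)   # the usual input-layer noise defense
    h = relu(dense(x, w1, b1))
    h = add_noise(h, hidden_sigma, rng)  # noise in a deeper (hidden) layer
    return dense(h, w2, b2)

# Hypothetical hand-picked weights, for illustration only.
params = ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], [[1.0, 2.0]], [0.1])
x = [2.0, 1.0]

clean_out = forward(x, params)
noisy_out = forward(x, params, input_sigma=0.1, hidden_sigma=0.5,
                    rng=random.Random(0))
print(clean_out, noisy_out)
```

With both noise levels set to zero the network is deterministic. With `hidden_sigma` above zero, each call sees slightly different hidden features, so a network trained this way cannot rely on one exact feature pattern.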

“Our first step was to devise a hypothetical method of attack that strikes deeper than the input layer. Such an attack would need to withstand the resilience of a network with a standard noise defense on its input layer. We call these feature-space adversarial examples,” said Ukita.

“These attacks work by supplying an input intentionally far from, rather than near to, the input that an ANN can correctly classify. But the trick is to present subtly misleading artifacts to the deeper layers instead. Once we demonstrated the danger from such an attack, we injected random noise into the deeper hidden layers of the network to boost their adaptability and therefore defensive capability. We are happy to report it works.”
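One way to read this description is that the attacking input sits far from the original when measured at the input layer, while the change it causes in a hidden layer is small but still enough to alter the result. The toy below illustrates only that reading. The network is hand-built so that its first layer ignores one input coordinate entirely, and every name and number in it is hypothetical. It is not how the researchers generated their examples.

```python
import math

# Hypothetical first layer: keeps inputs 0 and 1 and ignores input 2 entirely.
W1 = [[1.0, 0.0, 0.0],
      [0.0, 1.0, 0.0]]

def features(x):
    """Hidden-layer features: a linear map followed by ReLU."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in W1]

def predict(x):
    """Toy output layer: class 1 if feature 0 exceeds feature 1, else class 0."""
    h = features(x)
    return 1 if h[0] - h[1] > 0 else 0

x_clean = [1.0, 0.9, 0.0]
x_adv = [0.9, 1.0, 5.0]  # large change in the ignored coordinate, small elsewhere

input_distance = math.dist(x_clean, x_adv)
feature_distance = math.dist(features(x_clean), features(x_adv))

print(predict(x_clean), predict(x_adv))
print(round(input_distance, 3), round(feature_distance, 3))
```

The two inputs are roughly 5 units apart at the input layer but only about 0.14 apart in the hidden features, and they are assigned different classes. Real networks do not ignore a coordinate outright, but they can be much less sensitive to some input directions than others, which is what this toy exaggerates.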

While the new idea does prove robust, the team wishes to develop it further to make it even more effective against anticipated attacks, as well as other kinds of attacks they have not yet tested it against. At present, the defense only works on this specific kind of attack.

“Future attackers might try to consider attacks that can escape the feature-space noise we considered in this research,” said Ukita. “Indeed, attack and defense are two sides of the same coin; it’s an arms race that neither side will back down from, so we need to continually iterate, improve and innovate new ideas in order to protect the systems we use every day.”

The research is published in the journal Neural Networks.

More information:

Jumpei Ukita et al, Adversarial attacks and defenses using feature-space stochasticity, Neural Networks (2023). DOI: 10.1016/j.neunet.2023.08.022

University of Tokyo

Citation: Using the brain as a model inspires a more robust AI (2023, September 15), retrieved 15 September 2023.
