In recent years, artificial intelligence agents have succeeded in a range of complex game environments. For instance, AlphaZero beat world-champion programs in chess, shogi, and Go after starting out knowing no more than the basic rules of how to play. Through reinforcement learning (RL), this single system learnt by playing round after round of games through a repetitive process of trial and error. But AlphaZero still trained separately on each game, unable to simply learn another game or task without repeating the RL process from scratch. The same is true for other successes of RL, such as Atari, Capture the Flag, StarCraft II, Dota 2, and Hide-and-Seek. DeepMind’s mission of solving intelligence to advance science and humanity led us to explore how we could overcome this limitation to create AI agents with more general and adaptive behaviour. Instead of learning one game at a time, these agents would be able to react to completely new conditions and play a whole universe of games and tasks, including ones never seen before.

Today, we published “Open-Ended Learning Leads to Generally Capable Agents,” a preprint detailing our first steps to train an agent capable of playing many different games without needing human interaction data. We created a vast game environment we call XLand, which includes many multiplayer games within consistent, human-relatable 3D worlds. This environment makes it possible to formulate new learning algorithms, which dynamically control how an agent trains and the games on which it trains. The agent’s capabilities improve iteratively as a response to the challenges that arise in training, with the learning process continually refining the training tasks so the agent never stops learning. The result is an agent with the ability to succeed at a wide spectrum of tasks, from simple object-finding problems to complex games like hide and seek and capture the flag, which were not encountered during training. We find the agent exhibits general, heuristic behaviours such as experimentation: behaviours that are widely applicable to many tasks rather than specialised to an individual task. This new approach marks an important step toward creating more general agents with the flexibility to adapt rapidly within constantly changing environments.

A universe of training tasks

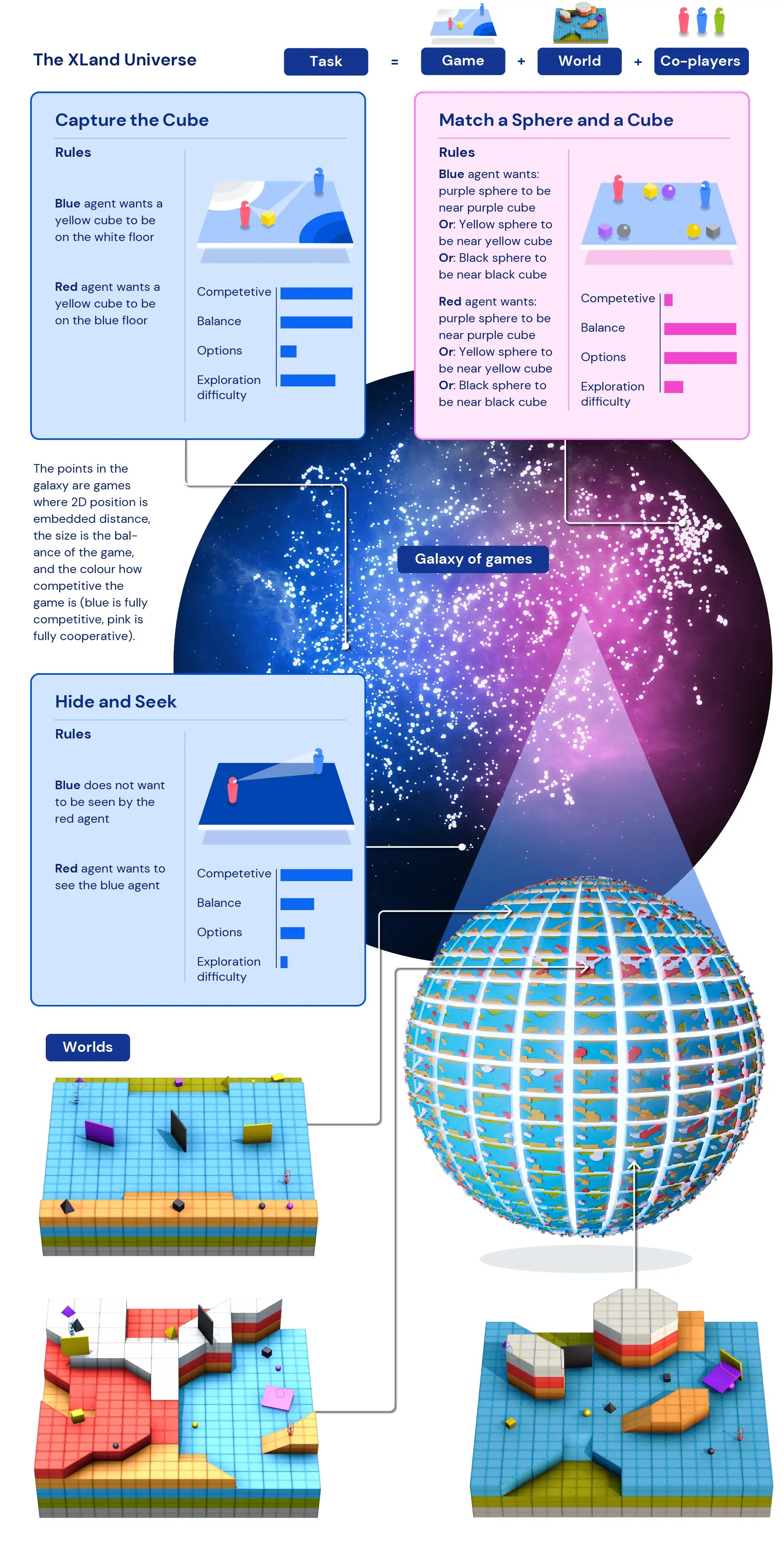

A lack of training data, where “data” points are different tasks, has been one of the major factors preventing RL-trained agents’ behaviour from being general enough to apply across games. Without being able to train agents on a vast enough set of tasks, agents trained with RL have been unable to adapt their learnt behaviours to new tasks. But by designing a simulated space to allow for procedurally generated tasks, our team created a way to train on, and generate experience from, tasks that are created programmatically. This enables us to include billions of tasks in XLand, across varied games, worlds, and players.
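To make “procedurally generated tasks” concrete, the following minimal sketch samples a task as a combination of a world, a game (goal), and a number of co-players from a seeded random generator. Everything here is hypothetical: the `Task` fields, the tiny object vocabulary, and the `near`/`on` relations are illustrative assumptions rather than XLand’s actual task specification, and a real world would be a generated 3D layout rather than a bare seed.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical vocabulary; XLand's real object and relation sets are not reproduced here.
COLOURS = ["purple", "yellow", "red", "black"]
SHAPES = ["cube", "sphere", "pyramid"]
RELATIONS = ["near", "on"]


def object_vocab() -> List[str]:
    """All colour/shape combinations, e.g. 'purple cube'."""
    return [f"{colour} {shape}" for colour in COLOURS for shape in SHAPES]


@dataclass(frozen=True)
class Task:
    world_seed: int             # stands in for a procedurally generated 3D world
    goal: Tuple[str, str, str]  # the game: one relation between two distinct objects
    num_co_players: int         # other agents sharing the world


def sample_task(rng: random.Random) -> Task:
    """Draw one task; the same seed always yields the same task."""
    first, second = rng.sample(object_vocab(), 2)  # two distinct objects
    return Task(
        world_seed=rng.randrange(2**32),
        goal=(rng.choice(RELATIONS), first, second),
        num_co_players=rng.randint(0, 2),
    )


# Even this toy space has 4 * 3 = 12 objects and 2 * 12 * 11 = 264 distinct goals,
# before multiplying by worlds and co-player configurations.
rng = random.Random(0)
tasks = [sample_task(rng) for _ in range(5)]
```

Seeding makes every generated task reproducible, so a generator can hand out an effectively unbounded stream of tasks while still being able to replay any single one of them.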

Our AI agents inhabit 3D first-person avatars in a multiplayer environment meant to simulate the physical world. The players sense their surroundings by observing RGB images and receive a text description of their goal, and they train on a range of games. These games are as simple as cooperative games to find objects and navigate worlds, where the goal for a player could be “be near the purple cube.” More complex games can be based on choosing from multiple rewarding options, such as “be near the purple cube or put the yellow sphere on the red floor,” and more competitive games include playing against co-players, such as symmetric hide and seek, where each player has the goal “see the opponent and make the opponent not see me.” Each game defines the rewards for the players, and each player’s ultimate objective is to maximise those rewards.
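Goals like these can be read as logical formulas: “or” separates alternative options, and each option is a conjunction of conditions on the world. The sketch below encodes that reading under simplifying assumptions: the world state is a set of true facts, and a player receives a reward of 1 on each step its goal holds and 0 otherwise. The fact encoding and the helper names `fact`, `negate`, and `step_reward` are hypothetical, not XLand’s interface.

```python
from typing import Callable, FrozenSet, Iterable, List, Tuple

Fact = Tuple[str, ...]
State = FrozenSet[Fact]               # hypothetical: the set of facts true at one step
Predicate = Callable[[State], bool]
Goal = List[List[Predicate]]          # disjunction (outer list) of conjunctions (inner lists)


def fact(*atom: str) -> Predicate:
    """True when the given atomic fact holds in the state."""
    return lambda state: atom in state


def negate(predicate: Predicate) -> Predicate:
    return lambda state: not predicate(state)


def goal_satisfied(goal: Goal, state: State) -> bool:
    # Any option may be satisfied; within an option, every predicate must hold.
    return any(all(p(state) for p in option) for option in goal)


def step_reward(goal: Goal, state: State) -> float:
    # Assumed binary per-step reward.
    return 1.0 if goal_satisfied(goal, state) else 0.0


def episode_return(goal: Goal, states: Iterable[State]) -> float:
    # The quantity each player tries to maximise.
    return sum(step_reward(goal, s) for s in states)


# "be near the purple cube or put the yellow sphere on the red floor"
either_goal: Goal = [
    [fact("near", "me", "purple cube")],
    [fact("on", "yellow sphere", "red floor")],
]

# symmetric hide and seek: "see the opponent and make the opponent not see me"
hide_and_seek: Goal = [
    [fact("see", "me", "opponent"), negate(fact("see", "opponent", "me"))],
]
```

In a symmetric game both players hold the mirrored goal, so one player’s rewarded state is typically the other’s unrewarded one.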

Because XLand can be programmatically specified, the game space allows for data to be generated in an automated and algorithmic fashion. And because the tasks in XLand involve multiple players, the behaviour of co-players greatly influences the challenges faced by the AI agent. These complex, non-linear interactions create an ideal source of data to train on, since sometimes even small changes in the components of the environment can result in large changes in the challenges for the agents.

Training methods

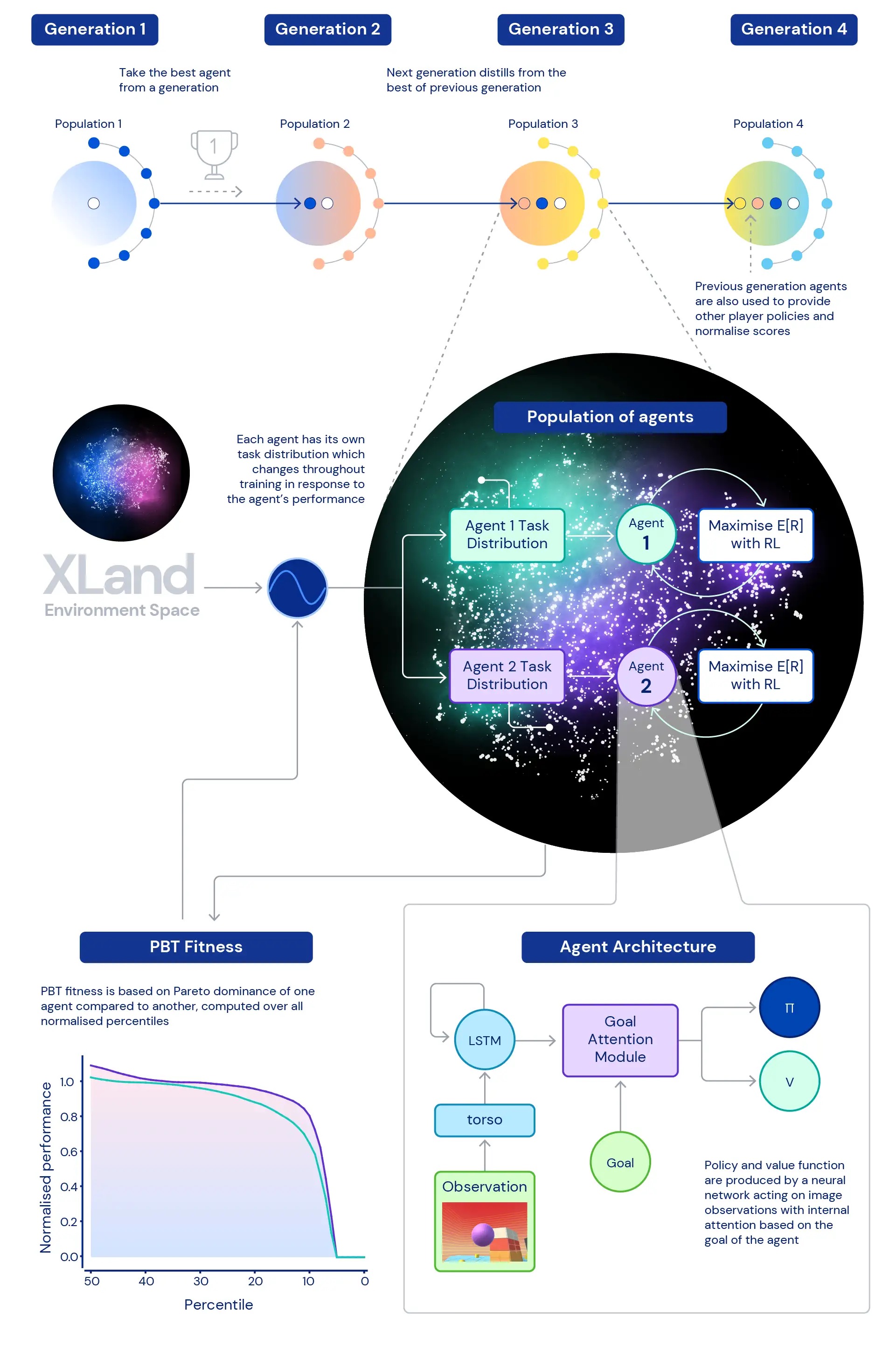

Central to our research is the role of deep RL in training the neural networks of our agents. The neural network architecture we use provides an attention mechanism over the agent’s internal recurrent state, helping guide the agent’s attention with estimates of subgoals unique to the game the agent is playing. We’ve found this goal-attentive agent (GOAT) learns more generally capable policies.
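The post does not spell out the GOAT architecture, so the following is only a generic illustration of goal-conditioned attention: a goal embedding acts as the query in scaled dot-product attention over several slots of a recurrent state, so slots relevant to the current goal dominate the summary the policy sees. The slot layout, the function name `goal_attention`, and the use of plain Python lists instead of a tensor library are assumptions made for readability; this is not the network described in the paper.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def goal_attention(goal_query: Vector, state_slots: List[Vector]) -> Tuple[List[float], Vector]:
    """Scaled dot-product attention with the goal embedding as the query.

    Keys and values are both the state slots here (a simplification).
    Returns the attention weights and the attended summary vector.
    """
    scale = math.sqrt(len(goal_query))
    weights = softmax([dot(goal_query, slot) / scale for slot in state_slots])
    dim = len(state_slots[0])
    summary = [sum(w * slot[i] for w, slot in zip(weights, state_slots)) for i in range(dim)]
    return weights, summary


# Two hypothetical state slots; the goal embedding aligns with the first.
weights, summary = goal_attention([1.0, 0.0], [[3.0, 0.0], [0.0, 3.0]])
```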

We also explored the question: what distribution of training tasks will produce the best possible agent, especially in such a vast environment? The dynamic task generation we use allows for continual changes to the distribution of the agent’s training tasks: every task is generated to be neither too hard nor too easy, but just right for training. We then use population based training (PBT) to adjust the parameters of the dynamic task generation based on a fitness that aims to improve agents’ general capability. And finally, we chain together multiple training runs so each generation of agents can bootstrap off the previous generation.
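One simple way to realise “neither too hard nor too easy” is to estimate the agent’s success rate on a candidate task with a few rollouts and keep the task only if that estimate falls inside an acceptance band. The sketch below assumes exactly that; the band limits `min_success` and `max_success` are hypothetical defaults, of the kind of generator parameter that PBT could then tune. It is not the paper’s exact filtering criterion.

```python
import random
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def estimate_success(run_episode: Callable[[T], bool], task: T, n: int = 10) -> float:
    """Monte Carlo estimate of the probability that the agent solves `task`."""
    return sum(run_episode(task) for _ in range(n)) / n


def filter_tasks(
    candidates: Sequence[T],
    success_rate: Callable[[T], float],
    min_success: float = 0.1,
    max_success: float = 0.9,
) -> List[T]:
    """Keep tasks the agent sometimes, but not always, solves."""
    return [t for t in candidates if min_success <= success_rate(t) <= max_success]


# Toy agent: solves a task of difficulty d with probability 1 - d.
rng = random.Random(0)


def toy_episode(difficulty: float) -> bool:
    return rng.random() >= difficulty


candidates = [i / 10 for i in range(11)]
training_tasks = filter_tasks(candidates, lambda t: estimate_success(toy_episode, t, n=50))
```

Tasks the toy agent always solves (difficulty 0.0) or never solves (difficulty 1.0) carry little learning signal, so the band discards them.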

This leads to a final training process with deep RL at the core, updating the neural networks of agents with every step of experience:

- the steps of experience come from training tasks that are dynamically generated in response to agents’ behaviour,

- agents’ task-generating functions mutate in response to agents’ relative performance and robustness,

- at the outermost loop, the generations of agents bootstrap from each other, provide ever richer co-players to the multiplayer environment, and redefine the measurement of progression itself.
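The middle loop, in which task-generating functions change according to agents’ relative performance, can be sketched as one round of population based training. This minimal sketch assumes a truncation-style exploit step (the weakest members copy a strong member’s generator parameters) followed by a random multiplicative explore step; the `Member` fields and the fitness values are hypothetical stand-ins, and a full PBT step would also copy network weights and re-evaluate fitness afterwards.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Member:
    min_success: float    # lower bound of the task-acceptance band (hypothetical)
    max_success: float    # upper bound of the task-acceptance band (hypothetical)
    fitness: float = 0.0  # hypothetical general-capability score


def perturb(value: float, rng: random.Random, factor: float = 0.2) -> float:
    """Explore: scale by (1 - factor) or (1 + factor), then clip to [0, 1]."""
    scale = rng.choice([1.0 - factor, 1.0 + factor])
    return min(1.0, max(0.0, value * scale))


def pbt_step(population: List[Member], rng: random.Random, frac: float = 0.25) -> List[Member]:
    """Replace the bottom `frac` of the population with perturbed copies of the top `frac`."""
    ranked = sorted(population, key=lambda m: m.fitness, reverse=True)
    k = max(1, int(len(ranked) * frac))
    top, survivors = ranked[:k], ranked[:-k]
    children = []
    for _ in range(k):
        parent = rng.choice(top)  # exploit: copy a strong member's parameters
        lo = perturb(parent.min_success, rng)
        hi = perturb(parent.max_success, rng)
        # Keep the band well-formed; fitness is a placeholder until re-evaluated.
        children.append(Member(min(lo, hi), max(lo, hi), fitness=parent.fitness))
    return survivors + children


rng = random.Random(0)
population = [Member(0.1, 0.9, fitness=f) for f in (0.1, 0.2, 0.3, 0.4)]
population = pbt_step(population, rng)
```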

The training process starts from scratch and iteratively builds complexity, constantly changing the learning problem to keep the agent learning. The iterative nature of the combined learning system, which does not optimise a bounded performance metric but rather the iteratively defined spectrum of general capability, leads to a potentially open-ended learning process for agents, limited only by the expressivity of the environment space and the agent’s neural network.

Measuring progress

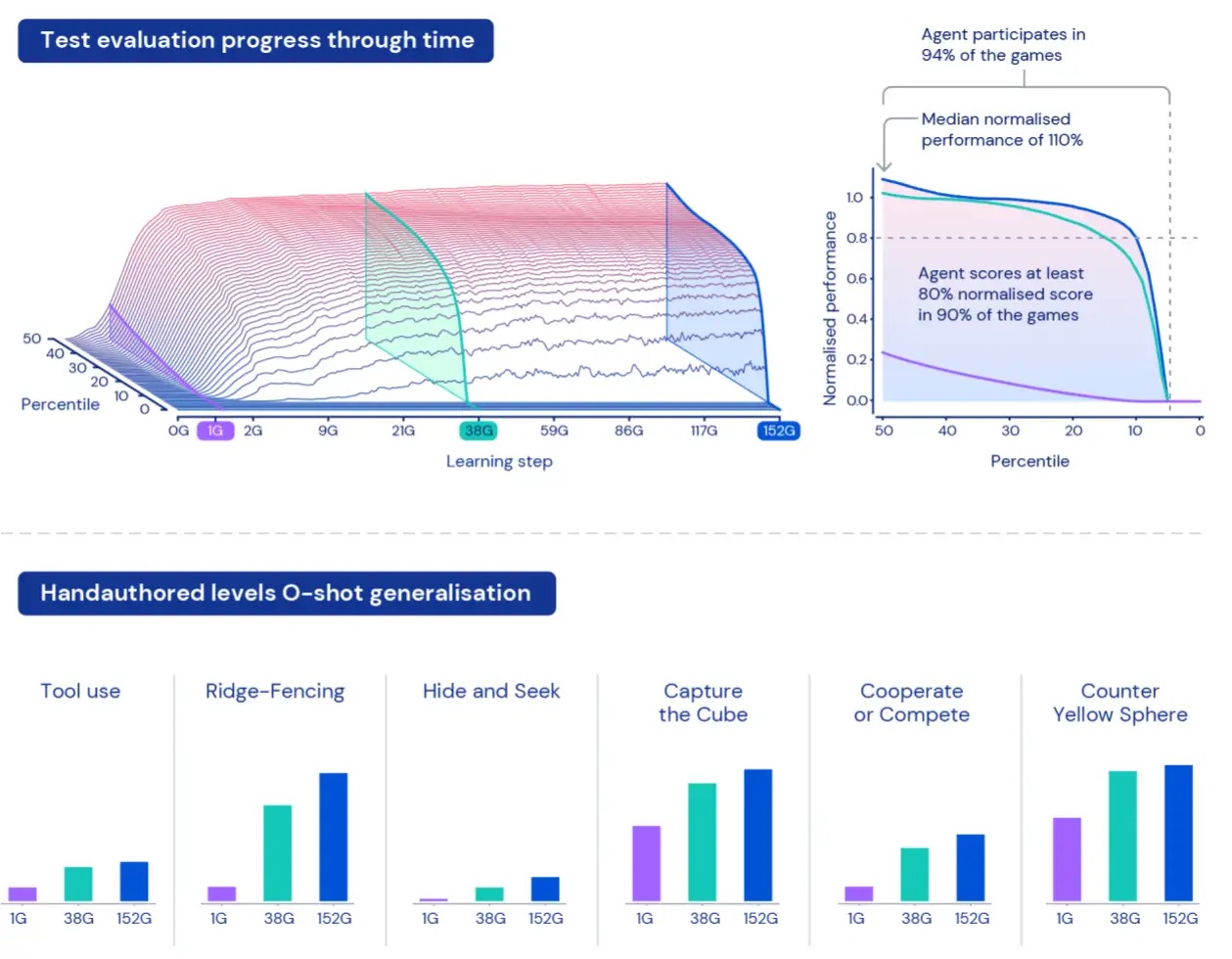

To measure how agents perform within this vast universe, we create a set of evaluation tasks using games and worlds that remain separate from the data used for training. These “held-out” tasks include specifically human-designed tasks like hide and seek and capture the flag.

Because of the size of XLand, understanding and characterising the performance of our agents can be a challenge. Each task involves different levels of complexity, different scales of achievable rewards, and different capabilities of the agent, so simply averaging the reward over held-out tasks would hide the actual differences in complexity and rewards, and would effectively treat all tasks as equally interesting, which isn’t necessarily true of procedurally generated environments.

To overcome these limitations, we take a different approach. Firstly, we normalise scores per task using the Nash equilibrium value computed using our current set of trained players. Secondly, we take into account the entire distribution of normalised scores: rather than looking at average normalised scores, we look at the different percentiles of normalised scores, as well as the percentage of tasks in which the agent scores at least one step of reward, which we call participation. This means an agent is considered better than another agent only if it exceeds performance on all percentiles. This approach to measurement gives us a meaningful way to assess our agents’ performance and robustness.
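The measurement scheme can be sketched in a few functions. This sketch assumes the per-task Nash equilibrium values have already been computed and are positive (computing them is out of scope here), uses nearest-rank percentiles, reads “at least one step of reward” as a raw return of at least 1 under a binary per-step reward, picks the 10th, 20th, and 50th percentiles purely as an illustrative set, and treats participation as one more coordinate of the comparison. All function names are hypothetical.

```python
from typing import Dict, List, Sequence


def normalise(raw: Sequence[float], nash_values: Sequence[float]) -> List[float]:
    """Divide each task's return by that task's (assumed positive) Nash equilibrium value."""
    return [r / z for r, z in zip(raw, nash_values)]


def percentile(values: Sequence[float], p: int) -> float:
    """Nearest-rank percentile for an integer p in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, -(-p * len(ordered) // 100))  # integer ceiling of p * n / 100
    return ordered[rank - 1]


def score_profile(raw: Sequence[float], nash_values: Sequence[float],
                  percentiles: Sequence[int] = (10, 20, 50)) -> Dict[str, float]:
    scores = normalise(raw, nash_values)
    profile = {f"p{p}": percentile(scores, p) for p in percentiles}
    # Participation: fraction of tasks with at least one rewarded step.
    profile["participation"] = sum(r >= 1 for r in raw) / len(raw)
    return profile


def better_than(a: Dict[str, float], b: Dict[str, float]) -> bool:
    """A beats B only if it is strictly higher on every coordinate."""
    return all(a[key] > b[key] for key in a)


nash = [10.0] * 10
agent_a = score_profile([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], nash)
agent_b = score_profile([0, 1, 2, 3, 4, 5, 6, 7, 8, 9], nash)
```

Because the comparison is coordinate-wise, two agents can be incomparable: an agent with a higher median but a lower 10th percentile is neither better nor worse, which a single averaged number would hide.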

More generally capable agents

After training our agents for five generations, we saw consistent improvements in learning and performance across our held-out evaluation space. Playing roughly 700,000 unique games in 4,000 unique worlds within XLand, each agent in the final generation experienced 200 billion training steps as a result of 3.4 million unique tasks. At this point, our agents have been able to participate in every procedurally generated evaluation task except for a handful that were impossible even for a human. And the results we’re seeing clearly exhibit general, zero-shot behaviour across the task space, with the frontier of normalised score percentiles continually improving.
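As a rough sense of scale, dividing the reported totals gives the average experience per unique task, assuming (only for this back-of-the-envelope figure) that steps were spread evenly across tasks, which the dynamic task generation does not guarantee:

$$
\frac{200 \times 10^{9}\ \text{steps}}{3.4 \times 10^{6}\ \text{tasks}} \approx 5.9 \times 10^{4}\ \text{steps per task}
$$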

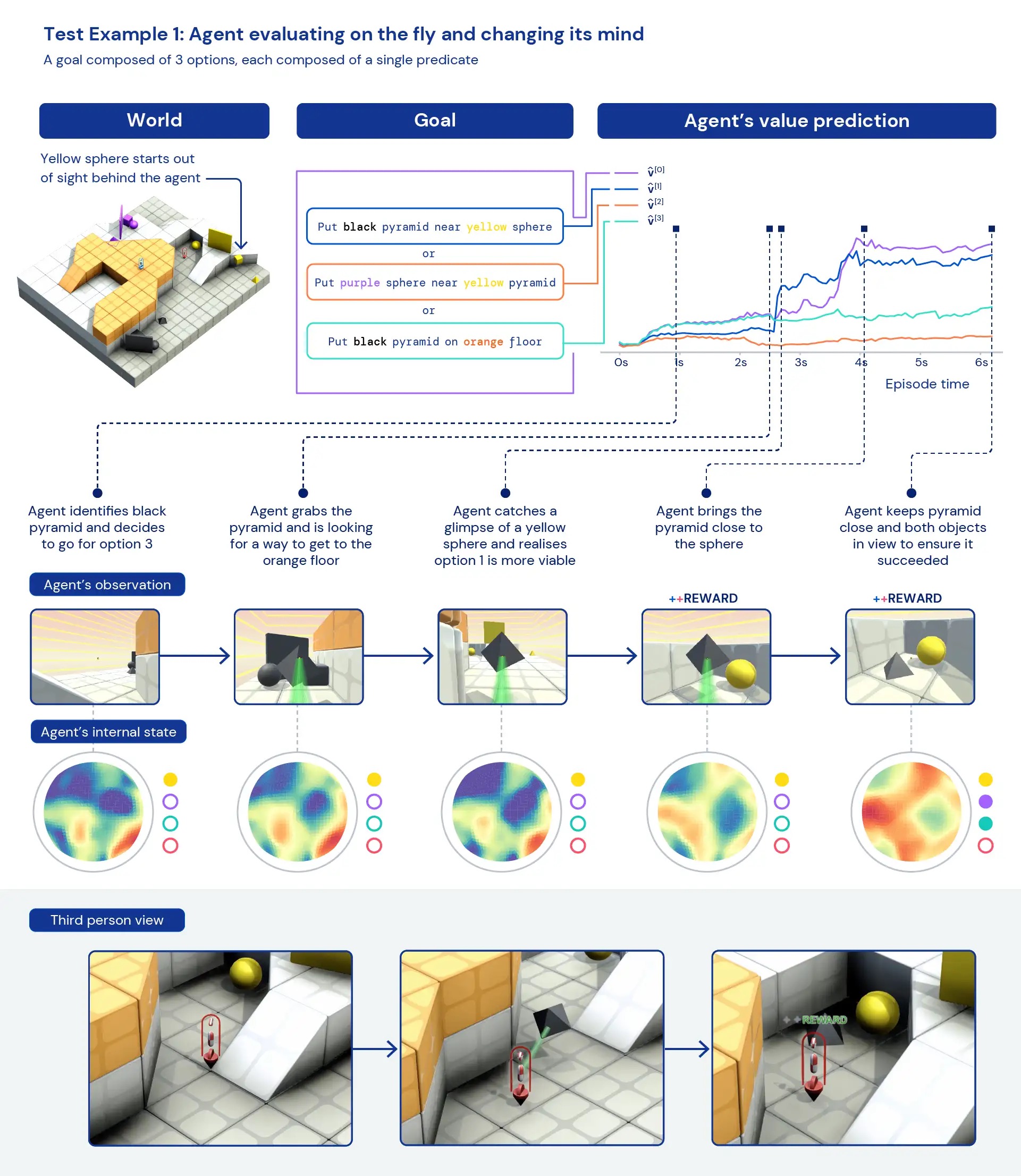

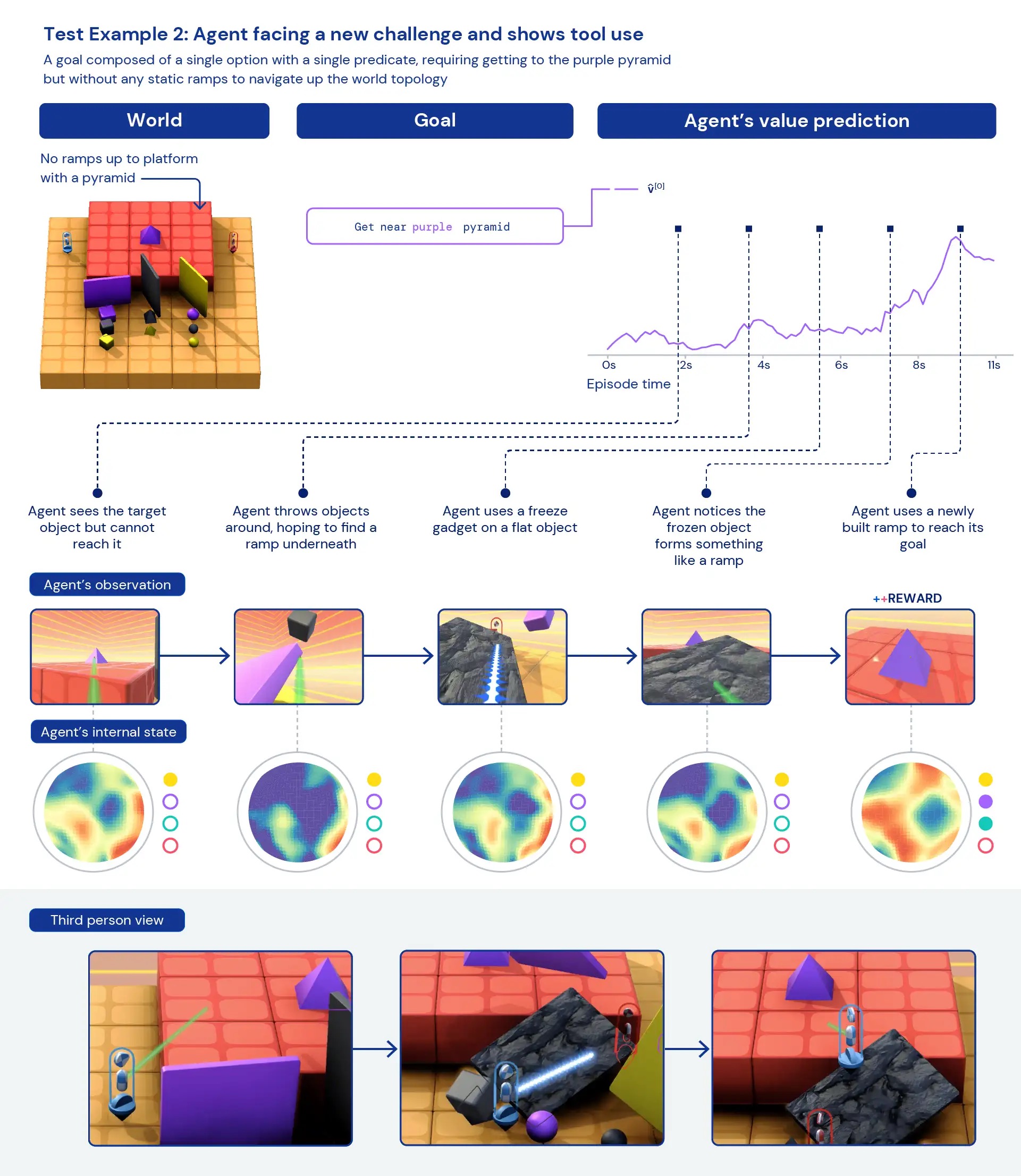

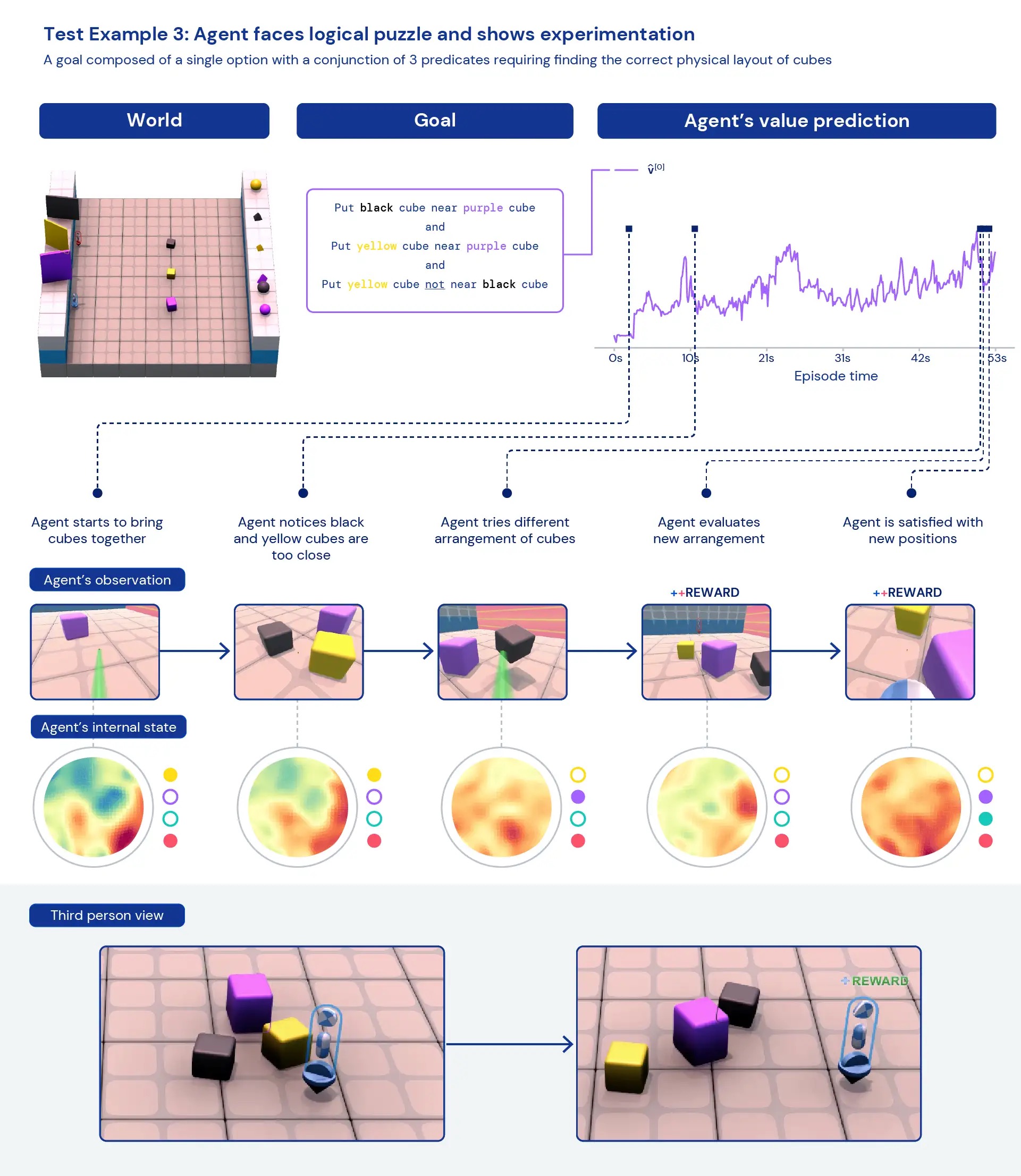

Looking qualitatively at our agents, we often see general, heuristic behaviours emerge, rather than highly optimised, specific behaviours for individual tasks. Instead of agents knowing exactly the “best thing” to do in a new situation, we see evidence of agents experimenting and changing the state of the world until they’ve achieved a rewarding state. We also see agents rely on the use of other tools, including objects to occlude visibility, to create ramps, and to retrieve other objects. Because the environment is multiplayer, we can examine the progression of agent behaviours while training on held-out social dilemmas, such as in a game of “chicken”. As training progresses, our agents appear to exhibit more cooperative behaviour when playing with a copy of themselves. Given the nature of the environment, it is difficult to pinpoint intentionality: the behaviours we see often appear to be accidental, but still we see them occur consistently.

Analysing the agent’s internal representations, we can say that by taking this approach to reinforcement learning in a vast task space, our agents are aware of the basics of their bodies and the passage of time, and that they understand the high-level structure of the games they encounter. Perhaps even more interestingly, they clearly recognise the reward states of their environment. This generality and diversity of behaviour in new tasks hints toward the potential to fine-tune these agents on downstream tasks. For instance, we show in the technical paper that with just 30 minutes of focused training on a newly presented complex task, the agents can quickly adapt, whereas agents trained with RL from scratch cannot learn these tasks at all.

By developing an environment like XLand and new training algorithms that support the open-ended creation of complexity, we’ve seen clear signs of zero-shot generalisation from RL agents. While these agents are starting to be generally capable within this task space, we look forward to continuing our research and development to further improve their performance and create ever more adaptive agents.

For more details, see the preprint of our technical paper and videos of the results we’ve seen. We hope this could help other researchers likewise see a new path toward creating more adaptive, generally capable AI agents. If you’re excited by these advances, consider joining our team.