It turns out that when you put together two AI experts, both of whom formerly worked at Meta researching responsible AI, magic happens. The founders of Patronus AI came together last March to build a solution to evaluate and test large language models with an eye towards regulated industries where there’s little tolerance for errors.

Rebecca Qian, who’s CTO at the company, led responsible NLP research at Meta AI, while her cofounder, CEO Anand Kannappan, was in charge of responsible machine learning frameworks at Meta Labs. Today their startup is having a big day: launching from stealth, making its product generally available, and announcing a $3 million seed round.

The company is in the right place at the right time, building a safety and evaluation framework in the form of a managed service for testing large language models to identify areas that could be problematic, particularly the likelihood of hallucinations, where the model makes up an answer because it lacks the data to respond correctly.

“In our product we really seek to automate and scale the full process and model evaluation to alert users when we identify issues,” Qian told TechCrunch.

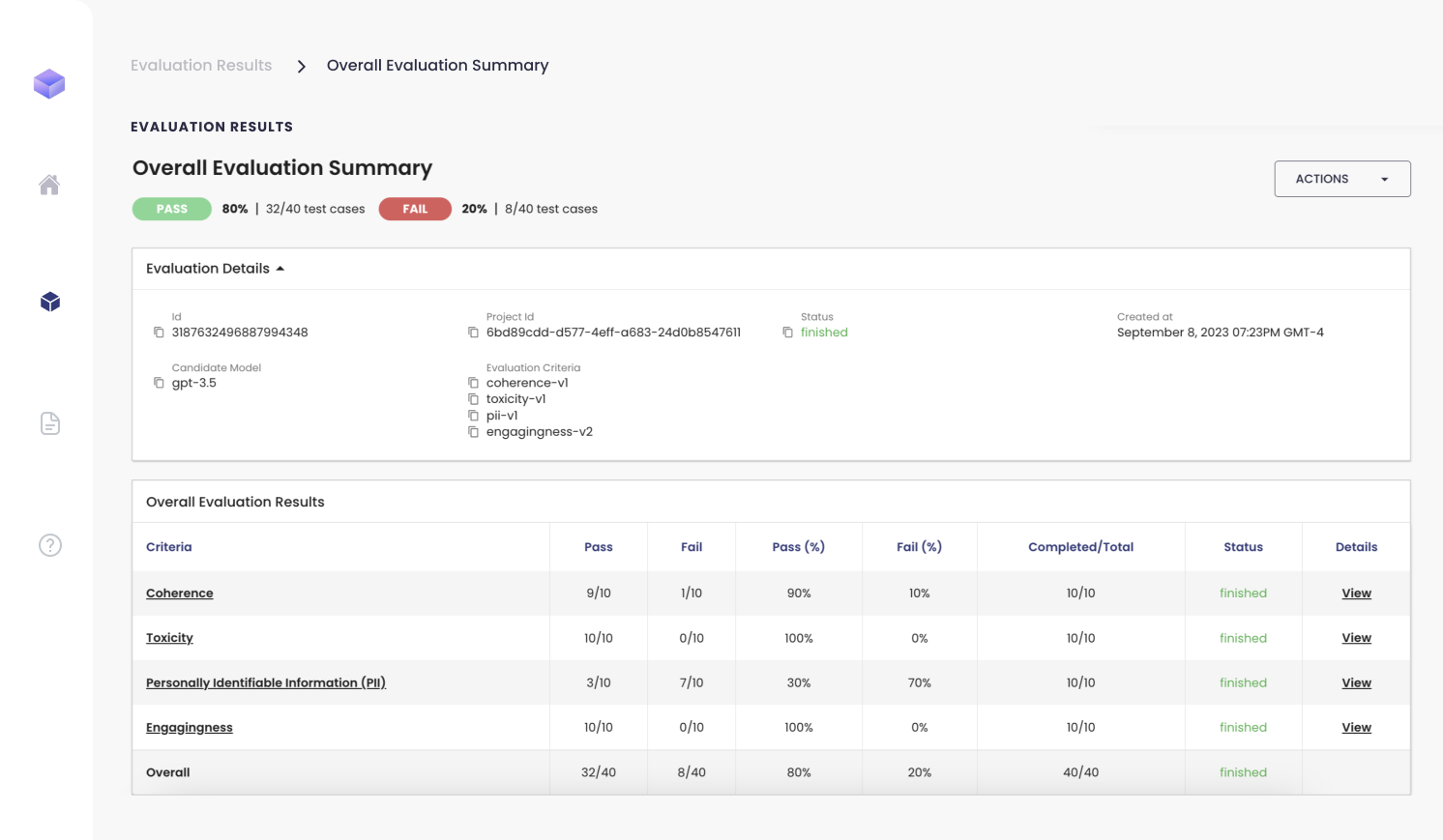

She says this involves three steps. “The first is scoring, where we help users actually score models in real world scenarios, such as finance, looking at key criteria such as hallucinations,” she said. Next, the product builds test cases, meaning it automatically generates adversarial test suites and stress tests the models against those tests. Finally, it benchmarks models using various criteria, depending on the requirements, to find the best model for a given job. “We compare different models to help users identify the best model for their specific use case. So for example, one model might have a higher failure rate and hallucinations compared to a different base model,” she said.
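To make the benchmarking step concrete, here is a minimal Python sketch of comparing models by hallucination failure rate on a shared test suite. It is an illustration only, not Patronus AI's product or API: the `EvalResult` record, the `failure_rates` and `best_model` helpers, the model names, and the sample data are all hypothetical, and the hallucination verdicts are assumed to come from some earlier scoring step.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """One scored answer (hypothetical record, not a real Patronus type)."""
    model: str          # hypothetical model name
    test_id: str        # identifier of an adversarial test case
    hallucinated: bool  # True if the answer was judged a hallucination


def failure_rates(results):
    """Return {model: fraction of its test cases flagged as hallucinations}."""
    totals, failures = {}, {}
    for r in results:
        totals[r.model] = totals.get(r.model, 0) + 1
        failures[r.model] = failures.get(r.model, 0) + int(r.hallucinated)
    return {m: failures[m] / totals[m] for m in totals}


def best_model(results):
    """Pick the model with the lowest failure rate (ties broken alphabetically)."""
    rates = failure_rates(results)
    return min(sorted(rates), key=lambda m: rates[m])


# Made-up verdicts for two hypothetical models on the same four test cases.
results = [
    EvalResult("model-a", "t1", True),
    EvalResult("model-a", "t2", False),
    EvalResult("model-a", "t3", True),
    EvalResult("model-a", "t4", False),
    EvalResult("model-b", "t1", False),
    EvalResult("model-b", "t2", False),
    EvalResult("model-b", "t3", True),
    EvalResult("model-b", "t4", False),
]

rates = failure_rates(results)
winner = best_model(results)
print(rates, winner)
```

In this sketch the model with the lower failure rate wins outright; a real comparison would likely weigh several criteria, as Qian describes.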

Image Credits: Patronus AI

The company is targeting highly regulated industries where wrong answers could have big consequences. “We help companies make sure the large language models they’re using are safe. We detect instances where their models produce business-sensitive information and inappropriate outputs,” Kannappan explained.

He says the startup’s goal is to be a trusted third party when it comes to evaluating models. “It’s easy for someone to say their LLM is the best, but there needs to be an unbiased, independent perspective. That’s where we come in. Patronus is the credibility checkmark,” he said.

The company plans to use a usage-based pricing model because the cost depends heavily on the volume of evaluations and samples that a company and its engineering team want to evaluate against.

It currently has six full-time employees, but they say given how quickly the space is growing, they plan to hire more people in the coming months, without committing to an exact number. Qian says diversity is a key pillar of the company. “It’s something we care deeply about. And it starts at the leadership level at Patronus. As we grow, we intend to continue to institute programs and initiatives to make sure we’re creating and maintaining an inclusive workspace,” she said.

Today’s $3 million seed was led by Lightspeed Venture Partners with participation from Factorial Capital and other industry angels.
