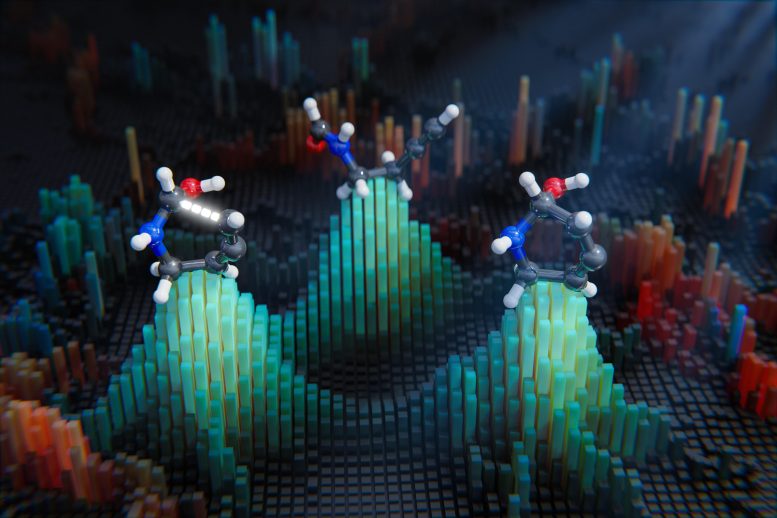

MIT chemists have developed a computational model that can rapidly predict the structure of the transition state of a reaction (left structure), if it is given the structure of a reactant (middle) and product (right). Credit: David W. Kastner

Using generative artificial intelligence, MIT chemists have developed a model that can predict the structures formed when a chemical reaction reaches its point of no return.

During a chemical reaction, molecules gain energy until they reach what’s known as the transition state — a point of no return from which the reaction must proceed. This state is so fleeting that it’s nearly impossible to observe it experimentally.

The structures of these transition states can be calculated using techniques based on quantum chemistry, but that process is extremely time-consuming. A team of MIT researchers has now developed an alternative approach, based on machine learning, that can calculate these structures much more quickly — within a few seconds.

Their new model could be used to help chemists design new reactions and catalysts to generate useful products like fuels or drugs, or to model naturally occurring chemical reactions such as those that might have helped to drive the evolution of life on Earth.

“Knowing that transition state structure is really important as a starting point for thinking about designing catalysts or understanding how natural systems enact certain transformations,” says Heather Kulik, an associate professor of chemistry and chemical engineering at MIT, and the senior author of the study.

Chenru Duan PhD ’22 is the lead author of a paper describing the work, which appears today in Nature Computational Science. Cornell University graduate student Yuanqi Du and MIT graduate student Haojun Jia are also authors of the paper.

Fleeting transitions

For any given chemical reaction to occur, it must go through a transition state, which takes place when it reaches the energy threshold needed for the reaction to proceed. The probability of any chemical reaction occurring is partly determined by how likely it is that the transition state will form.

“The transition state helps to determine the likelihood of a chemical transformation happening. If we have a lot of something that we don’t want, like carbon dioxide, and we’d like to convert it to a useful fuel like methanol, the transition state and how favorable that is determines how likely we are to get from the reactant to the product,” Kulik says.

Chemists can calculate transition states using a quantum chemistry method known as density functional theory. However, this method requires a huge amount of computing power and can take many hours or even days to calculate just one transition state.

Recently, some researchers have tried to use machine-learning models to discover transition state structures. However, models developed so far require considering two reactants as a single entity in which the reactants maintain the same orientation with respect to each other. Any other possible orientations must be modeled as separate reactions, which adds to the computation time.

“If the reactant molecules are rotated, then in principle, before and after this rotation they can still undergo the same chemical reaction. But in the traditional machine-learning approach, the model will see these as two different reactions. That makes the machine-learning training much harder, as well as less accurate,” Duan says.
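The symmetry Duan describes can be illustrated with a small numerical check. The sketch below is not the team's model; it only shows, for a hypothetical three-atom fragment with made-up coordinates, that rotating a molecule changes its raw Cartesian coordinates while leaving every interatomic distance unchanged. A model that reads raw coordinates without accounting for this would treat the two copies as different inputs.

```python
import numpy as np

def rotation_about_z(angle_rad):
    """3x3 matrix for a rotation about the z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def pairwise_distances(coords):
    """Matrix of distances between every pair of atoms."""
    diff = coords[:, None, :] - coords[None, :, :]
    return np.linalg.norm(diff, axis=-1)

# Hypothetical fragment; coordinates in angstroms, for illustration only.
reactant = np.array([[0.0, 0.0, 0.0], [0.96, 0.0, 0.0], [-0.24, 0.93, 0.0]])
rotated = reactant @ rotation_about_z(np.pi / 3).T

# The coordinates differ after rotation, but the geometry does not.
coords_match = bool(np.allclose(reactant, rotated))
distances_match = bool(
    np.allclose(pairwise_distances(reactant), pairwise_distances(rotated))
)
print(coords_match, distances_match)  # prints: False True
```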

The MIT team developed a new computational approach that allows them to represent two reactants in any arbitrary orientation with respect to each other. It uses a type of model known as a diffusion model, which can learn which types of processes are most likely to generate a particular outcome. As training data for their model, the researchers used structures of reactants, products, and transition states for 9,000 different chemical reactions, all calculated using quantum chemistry methods.

“Once the model learns the underlying distribution of how these three structures coexist, we can give it new reactants and products, and it will try to generate a transition state structure that pairs with those reactants and products,” Duan says.

The researchers tested their model on about 1,000 reactions that it hadn’t seen before, asking it to generate 40 possible solutions for each transition state. They then used a “confidence model” to predict which states were the most likely to occur. These solutions were accurate to within 0.08 angstroms (an angstrom is one hundred-millionth of a centimeter) when compared to transition state structures generated using quantum techniques. The entire computational process takes just a few seconds for each reaction.
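The generate, rank, and compare workflow can be sketched in a few lines. This is a rough illustration and not the researchers' code. It assumes the accuracy figure is a root-mean-square deviation (RMSD) measured after optimally superimposing the two structures, which the article does not specify. The reference structure, the 40 noisy candidates, and the confidence scores are all made-up placeholders, and the names `kabsch_rmsd` and `pick_most_confident` are hypothetical.

```python
import numpy as np

def kabsch_rmsd(candidate, reference):
    """RMSD (same units as the input) after optimally aligning candidate to reference."""
    p = candidate - candidate.mean(axis=0)   # remove translation
    q = reference - reference.mean(axis=0)
    u, _, vt = np.linalg.svd(p.T @ q)        # Kabsch algorithm: SVD of the covariance
    d = np.sign(np.linalg.det(vt.T @ u.T))   # guard against a mirror-image solution
    rotation = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    aligned = p @ rotation.T
    return float(np.sqrt(np.mean(np.sum((aligned - q) ** 2, axis=1))))

def pick_most_confident(candidates, confidence_scores):
    """Return the candidate with the highest confidence score."""
    return candidates[int(np.argmax(confidence_scores))]

rng = np.random.default_rng(0)

# Placeholder "reference" transition state (angstroms); not real chemistry.
reference_ts = np.array(
    [[0.0, 0.0, 0.0], [1.1, 0.0, 0.0], [0.0, 1.2, 0.0], [0.3, 0.4, 1.0]]
)

# Stand-ins for 40 generated structures and for the confidence model's scores.
candidates = [
    reference_ts + rng.normal(scale=0.05, size=reference_ts.shape) for _ in range(40)
]
confidence_scores = rng.random(40)

chosen = pick_most_confident(candidates, confidence_scores)
error = kabsch_rmsd(chosen, reference_ts)
print(f"RMSD of chosen candidate: {error:.3f} angstroms")
```

Because the structures are aligned before they are compared, a candidate that is merely rotated or shifted relative to the reference scores an RMSD of essentially zero.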

“You can imagine that really scales to thinking about generating thousands of transition states in the time that it would normally take you to generate just a handful with the conventional method,” Kulik says.

Modeling reactions

Although the researchers trained their model primarily on reactions involving compounds with a relatively small number of atoms — up to 23 atoms for the entire system — they found that it could also make accurate predictions for reactions involving larger molecules.

“Even if you look at bigger systems or systems catalyzed by enzymes, you’re getting pretty good coverage of the different types of ways that atoms are most likely to rearrange,” Kulik says.

The researchers now plan to expand their model to incorporate other components such as catalysts, which could help them investigate how much a particular catalyst would speed up a reaction. This could be useful for developing new processes for generating pharmaceuticals, fuels, or other useful compounds, especially when the synthesis involves many chemical steps.

“Traditionally all of these calculations are performed with quantum chemistry, and now we’re able to replace the quantum chemistry part with this fast generative model,” Duan says.

Other potential applications for this kind of model include exploring the interactions that might occur between gases found on other planets and modeling the simple reactions that may have occurred during the early evolution of life on Earth, the researchers say.

Reference: “Accurate transition state generation with an object-aware equivariant elementary reaction diffusion model” by Chenru Duan, Yuanqi Du, Haojun Jia and Heather J. Kulik, 15 December 2023, Nature Computational Science.

DOI: 10.1038/s43588-023-00563-7

The research was funded by the U.S. Office of Naval Research and the National Science Foundation.