I remember the first time I used v1.0 of Visual Basic. Back then, it was a program for DOS. Before it, writing programs was extremely complex and I’d never managed to make much progress beyond the most basic toy applications. But with VB, I drew a button on the screen, typed in a single line of code that I wanted to run when that button was clicked, and I had a complete application I could now run. It was such an amazing experience that I’ll never forget it.

It felt like coding would never be the same again.

Writing code in Mojo, a new programming language from Modular, is the second time in my life I’ve had that feeling. Here’s what it looks like:

Why not just use Python?

Before I explain why I’m so excited about Mojo, I first need to say a few things about Python.

Python is the language that I have used for nearly all my work over the last few years. It is a beautiful language. It has an elegant core, on which everything else is built. This approach means that Python can (and does) do absolutely anything. But it comes with a downside: performance.

A few percent here or there doesn’t matter. But Python is many thousands of times slower than languages like C++. This makes it impractical to use Python for the performance-sensitive parts of code – the inner loops where performance is critical.
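To make “inner loop” concrete, here is a naive matrix multiply in pure Python. It is a sketch for illustration only, not code from Modular’s demo. The innermost loop body runs n·m·k times, and in CPython every one of those steps (two indexing operations, a multiply, an add) is interpreted bytecode acting on boxed float objects, which is where the gap with compiled languages comes from.

```python
def matmul(a, b):
    """Naive matrix multiply of two lists-of-lists (a is n x k, b is k x m)."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    c = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            acc = 0.0
            # The innermost loop runs n*m*k times in total; in CPython each
            # iteration is interpreted, with dynamic dispatch on every operation.
            for p in range(k):
                acc += a[i][p] * b[p][j]
            c[i][j] = acc
    return c
```

For example, `matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19.0, 22.0], [43.0, 50.0]]`.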

However, Python has a trick up its sleeve: it can call out to code written in fast languages. So Python programmers learn to avoid using Python for the implementation of performance-critical sections, instead using Python wrappers over code written in C, FORTRAN, Rust, etc. Libraries like NumPy and PyTorch provide “pythonic” interfaces to high performance code, allowing Python programmers to feel right at home, even as they’re using highly optimised numeric libraries.

Nearly all AI models today are developed in Python, thanks to the flexible and elegant programming language, fantastic tools and ecosystem, and high performance compiled libraries.

But this “two-language” approach has serious downsides. For instance, AI models often have to be converted from Python into a faster implementation, such as ONNX or TorchScript. But these deployment approaches can’t support all of Python’s features, so Python programmers have to learn to use a subset of the language that matches their deployment target. It’s very hard to profile or debug the deployment version of the code, and there’s no guarantee it will even run identically to the Python version.

The two-language problem gets in the way of learning. Instead of being able to step into the implementation of an algorithm while your code runs, or jump to the definition of a method of interest, you find yourself deep in the weeds of C libraries and binary blobs. All coders are learners (or at least, they should be) because the field constantly develops, and no-one can understand it all. So this difficulty with learning is a problem for experienced devs just as much as it is for students starting out.

The same problem occurs when trying to debug code or find and resolve performance problems. The two-language problem means that the tools that Python programmers are familiar with no longer apply as soon as we find ourselves jumping into the backend implementation language.

There are also unavoidable performance problems, even when a faster compiled implementation language is used for a library. One major issue is the lack of “fusion”: calling a bunch of compiled functions in a row leads to a lot of overhead, as data is converted to and from Python formats, and the cost of switching from Python to C and back must be paid repeatedly. So instead we have to write special “fused” versions of common combinations of functions (such as a linear layer followed by a rectified linear unit (ReLU) in a neural net), and call these fused versions from Python. This means there are many more library functions to implement and remember, and you’re out of luck if you’re doing anything even slightly non-standard because there won’t be a fused version for you.
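A toy illustration of the difference, in pure Python. The function names are hypothetical, and for simplicity the “linear layer” is an elementwise `w * x + b` with scalar `w` and `b`; real libraries fuse at the level of compiled kernels, not Python loops. The unfused version makes one full pass per operation and materialises an intermediate list after each step (in a two-language library, each step would also be a separate trip across the Python/C boundary), while the fused version does the same arithmetic in a single pass with no intermediates.

```python
def linear_then_relu_unfused(xs, w, b):
    """Three separate 'library calls', each producing a full intermediate list."""
    scaled = [x * w for x in xs]           # pass 1: multiply
    shifted = [s + b for s in scaled]      # pass 2: add bias
    return [max(0.0, t) for t in shifted]  # pass 3: ReLU


def linear_then_relu_fused(xs, w, b):
    """One pass over the data; no intermediate lists are allocated."""
    return [max(0.0, x * w + b) for x in xs]
```

Both return `[0.0, 0.0, 0.5, 2.5, 6.5]` for `xs = [-2.0, -0.5, 0.0, 1.0, 3.0]`, `w = 2.0`, `b = 0.5`; only the amount of memory traffic differs.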

We also have to deal with the lack of effective parallel processing in Python. Nowadays we all have computers with lots of cores, but Python generally will just use one at a time. There are some clunky ways to write parallel code which uses more than one core, but they either have to work on totally separate memory (and have a lot of overhead to start up) or they have to take turns running Python code (the dreaded “global interpreter lock”, which often makes parallel code actually slower than single-threaded code!).

Libraries like PyTorch have been developing increasingly ingenious ways to deal with these performance problems, with the newly released PyTorch 2 even including a compile() function that uses a sophisticated compilation backend to create high performance implementations of Python code. However, functionality like this can’t work magic: there are fundamental limitations on what’s possible with Python based on how the language itself is designed.

You might imagine that in practice there’s just a small number of building blocks for AI models, and so it doesn’t really matter if we have to implement each of these in C. Besides which, they’re pretty basic algorithms on the whole anyway, right? For instance, transformer models are nearly entirely implemented by multiple layers of two components, multilayer perceptrons (MLP) and attention, which can be implemented with just a few lines of Python with PyTorch. Here’s the implementation of an MLP:

```python
nn.Sequential(nn.Linear(ni,nh), nn.GELU(), nn.LayerNorm(nh), nn.Linear(nh,ni))
```

…and here’s a self-attention layer:

```python
import torch
from einops import rearrange

# Method of an nn.Module; self.norm, self.qkv, self.proj, self.nheads and
# self.scale are set up in __init__ (not shown).
def forward(self, x):
    # Normalise, then project to concatenated queries, keys and values
    x = self.qkv(self.norm(x))
    # Split channels into heads and fold the heads into the batch dimension
    x = rearrange(x, 'n s (h d) -> (n h) s d', h=self.nheads)
    q,k,v = torch.chunk(x, 3, dim=-1)
    # Scaled dot-product attention
    s = (q@k.transpose(1,2))/self.scale
    x = s.softmax(dim=-1)@v
    # Merge the heads back into the channel dimension
    x = rearrange(x, '(n h) s d -> n s (h d)', h=self.nheads)
    return self.proj(x)
```

But this hides the fact that real-world implementations of these operations are far more complex. For instance, check out this memory-optimised “flash attention” implementation in CUDA C. It also hides the fact that there are huge amounts of performance being left on the table by these generic approaches to building models. For instance, “block sparse” approaches can dramatically improve speed and memory use. Researchers are working on tweaks to nearly every part of common architectures, and coming up with new architectures (and SGD optimisers, and data augmentation methods, etc) – we’re not even close to having some neatly wrapped-up system that everyone will use forever more.

In practice, much of the fastest code today used for language models is being written in C and C++. For instance, Fabrice Bellard’s TextSynth and Georgi Gerganov’s ggml both use C, and as a result are able to take full advantage of the performance benefits of fully compiled languages.

Enter Mojo

Chris Lattner is responsible for creating many of the projects that we all rely on today, even though we might not have heard of all the stuff he built! As part of his PhD thesis he started the development of LLVM, which fundamentally changed how compilers are created, and today forms the foundation of many of the most widely used language ecosystems in the world. He then went on to launch Clang, a C and C++ compiler that sits on top of LLVM, and is used by most of the world’s most significant software developers (including providing the backbone for Google’s performance critical code). LLVM includes an “intermediate representation” (IR), a special language designed for machines to read and write (instead of for people), which has enabled a huge community of software to work together to provide better programming language functionality across a wider range of hardware.

However, Chris saw that C and C++ didn’t fully leverage the power of LLVM, so while he was working at Apple he designed a new language, called “Swift”, which he describes as “syntax sugar for LLVM”. Swift has gone on to become one of the world’s most widely used programming languages, in particular because it is today the main way to create apps for iPhone, iPad, Mac, and Apple TV.

Unfortunately, Apple’s control of Swift has meant it hasn’t really had its time to shine outside of the cloistered Apple world. Chris led a team for a while at Google to try to move Swift out of its Apple comfort zone, to become a replacement for Python in AI model development. I was very excited about this project, but sadly it did not receive the support it needed from either Apple or from Google, and it was not ultimately successful.

Having said that, whilst at Google Chris did develop another project which became hugely successful: MLIR. MLIR is a replacement for LLVM’s IR for the modern age of many-core computing and AI workloads. It’s critical for fully leveraging the power of hardware like GPUs, TPUs, and the vector units increasingly being added to server-class CPUs.

So, if Swift was “syntax sugar for LLVM”, what’s “syntax sugar for MLIR”? The answer is: Mojo! Mojo is a brand new language that’s designed to take full advantage of MLIR. And also Mojo is Python.

Wait what?

OK let me explain. Maybe it’s better to say Mojo is Python++. It will be (when complete) a strict superset of the Python language. But it also has additional functionality so we can write high performance code that takes advantage of modern accelerators.

Mojo seems to me like a more pragmatic approach than Swift. Whereas Swift was a brand new language packing all kinds of cool features based on the latest research in programming language design, Mojo is, at its heart, just Python. This seems wise, not just because Python is already well understood by millions of coders, but also because after decades of use its capabilities and limitations are now well understood. Relying on the latest programming language research is pretty cool, but it’s potentially dangerous speculation because you never really know how things will turn out. (I will admit that personally, for instance, I often got confused by Swift’s powerful but quirky type system, and sometimes even managed to confuse the Swift compiler and blew it up entirely!)

A key trick in Mojo is that you can opt in at any time to a faster “mode” as a developer, by using “fn” instead of “def” to create your function. In this mode, you have to declare exactly what the type of every variable is, and as a result Mojo can create optimised machine code to implement your function. Furthermore, if you use “struct” instead of “class”, your attributes will be tightly packed into memory, such that they can even be used in data structures without chasing pointers around. These are the kinds of features that allow languages like C to be so fast, and now they’re accessible to Python programmers too – just by learning a tiny bit of new syntax.
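Mojo’s own syntax isn’t reproduced here. As a rough analogy in plain Python, the standard-library `ctypes` module can contrast the two memory layouts: a regular class instance holds its attributes as references to separately allocated objects, whereas a `ctypes.Structure` lays its fields out inline like a C struct, so an array of them is one flat block of memory. This is only an analogy for the idea in the paragraph above, not how Mojo implements `struct`; the class names are made up for illustration.

```python
import ctypes


class PointClass:
    """Ordinary Python class: each attribute references a boxed int object."""
    def __init__(self, x, y):
        self.x = x
        self.y = y


class PointStruct(ctypes.Structure):
    """C-style struct: two 32-bit ints stored inline, 8 bytes per point."""
    _fields_ = [("x", ctypes.c_int32), ("y", ctypes.c_int32)]


# A list of class instances is an array of pointers to objects elsewhere on the heap.
objs = [PointClass(i, 2 * i) for i in range(4)]

# A ctypes array of structs is a single contiguous buffer: no pointer chasing.
PointArray = PointStruct * 4
packed = PointArray(*[PointStruct(i, 2 * i) for i in range(4)])
```

Here `ctypes.sizeof(packed)` is 32, and `ctypes.addressof(packed[1]) - ctypes.addressof(packed[0])` is 8: consecutive points sit right next to each other in memory.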

How is this possible?

There have, at this point, been hundreds of attempts over decades to create programming languages which are concise, flexible, fast, practical, and easy to use, without much success. But somehow, Modular seems to have done it. How could this be? There are a couple of hypotheses we might come up with:

- Mojo hasn’t actually achieved these things, and the snazzy demo hides disappointing real-life performance, or

- Modular is a huge company with hundreds of developers working for years, putting in more hours in order to achieve something that’s not been achieved before.

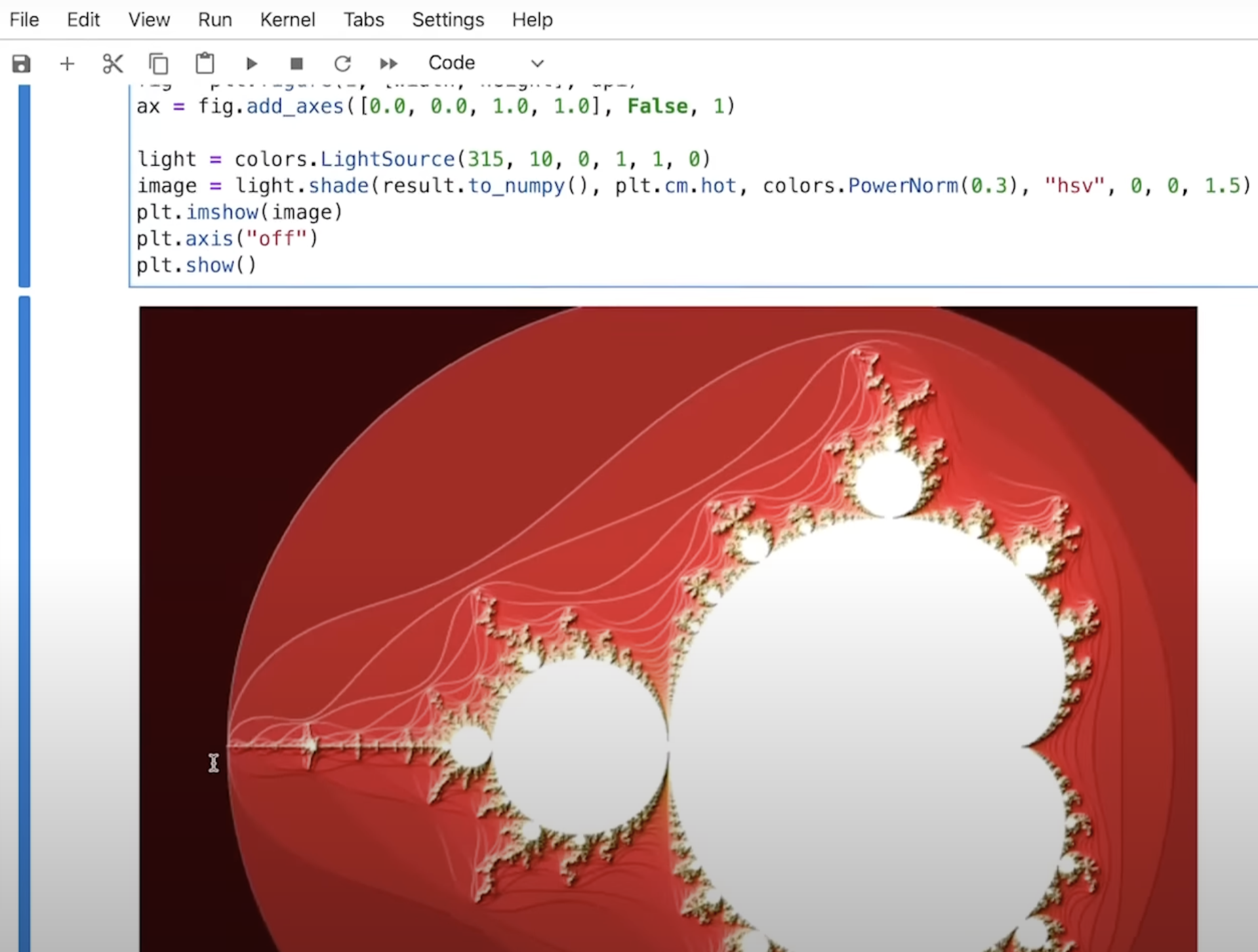

Neither of these things is true. The demo, in fact, was created in just a few days before I recorded the video. The two examples we gave (matmul and mandelbrot) were not carefully chosen as being the only things that happened to work after trying dozens of approaches; rather, they were the only things we tried for the demo and they worked first time! Whilst there are plenty of missing features at this early stage (Mojo isn’t even released to the public yet, other than an online “playground”), the demo you see really does work the way you see it. And indeed you can run it yourself now in the playground.

Modular is a fairly small startup that’s only a year old, and only one part of the company is working on the Mojo language. Mojo development was only started recently. It’s a small team, working for a short time, so how have they done so much?

The key is that Mojo builds on some really powerful foundations. Very few software projects I’ve seen spend enough time building the right foundations, and as a result they tend to accrue mounds of technical debt. Over time, it becomes harder and harder to add features and fix bugs. In a well designed system, however, every feature is easier to add than the last one, is faster, and has fewer bugs, because the foundations each feature builds upon are getting better and better. Mojo is a well designed system.

At its core is MLIR, which has already been developed for many years, initially kicked off by Chris Lattner at Google. He had recognised what the core foundations for an “AI era programming language” would need, and focused on building them. MLIR was a key piece. Just as LLVM made it dramatically easier for powerful new programming languages to be developed over the last decade (such as Rust, Julia, and Swift, which are all based on LLVM), MLIR provides an even more powerful core to languages that are built on it.

Another key enabler of Mojo’s rapid development is the decision to use Python as the syntax. Developing and iterating on syntax is one of the most error-prone, complex, and controversial parts of the development of a language. By simply outsourcing that to an existing language (which also happens to be the most widely used language today) that whole piece disappears! The relatively small number of new bits of syntax needed on top of Python then largely fit quite naturally, since the base is already in place.

The next step was to create a minimal Pythonic way to call MLIR directly. That wasn’t a big job at all, but it was all that was needed to then create all of Mojo on top of that, and work directly in Mojo for everything else. That meant that the Mojo devs were able to “dog-food” Mojo when writing Mojo, nearly from the very start. Any time they found something didn’t quite work well as they developed Mojo, they could add a needed feature to Mojo itself to make it easier for them to develop the next bit of Mojo!

This is very similar to Julia, which was developed on a minimal LISP-like core that provides the Julia language elements, which are then bound to basic LLVM operations. Nearly everything in Julia is built on top of that, using Julia itself.

I can’t begin to describe all the little (and big!) ideas throughout Mojo’s design and implementation – it’s the result of Chris and his team’s decades of work on compiler and language design and includes all the tricks and hard-won experience from that time – but what I can describe is an amazing result that I saw with my own eyes.

The Modular team internally announced that they’d decided to launch Mojo with a video, including a demo – and they set a date just a few weeks in the future. But at that time Mojo was just the most bare-bones language. There was no usable notebook kernel, hardly any of the Python syntax was implemented, and nothing was optimised. I couldn’t understand how they hoped to implement all this in a matter of weeks – let alone to make it any good! What I saw over this time was astonishing. Every day or two whole new language features were implemented, and as soon as there was enough in place to try running algorithms, generally they’d be at or near state of the art performance right away! I realised that what was happening was that all the foundations were already in place, and that they’d been explicitly designed to build the things that were now under development. So it shouldn’t have been a surprise that everything worked, and worked well – after all, that was the plan all along!

This is a reason to be optimistic about the future of Mojo. Although it’s still early days for this project, my guess, based on what I’ve observed in the last few weeks, is that it’s going to develop faster and further than most of us expect…

Deployment

I’ve left one of the bits I’m most excited about to last: deployment. Currently, if you want to give your cool Python program to a friend, then you’re going to have to tell them to first install Python! Or, you could give them an enormous file that includes the entirety of Python and the libraries you use all packaged up together, which will be extracted and loaded when they run your program.

Because Python is an interpreted language, how your program will behave will depend on the exact version of Python that’s installed, what versions of what libraries are present, and how it’s all been configured. To avoid this maintenance nightmare, the Python community has instead settled on a couple of options for installing Python applications: environments, which have a separate Python installation for each program; or containers, which have much of an entire operating system set up for each application. Both approaches lead to a lot of confusion and overhead in developing and deploying Python applications.
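For reference, here is the “environment” approach driven from Python’s standard-library `venv` module; the helper name and directory name are arbitrary choices for this sketch. Each environment gets its own interpreter executable and `site-packages` directory (the standard library itself is shared with the base installation), and every application then needs its own environment with its own set of packages installed into it.

```python
import os
import tempfile
import venv


def make_env(parent_dir, name="app-env"):
    """Create an isolated Python environment under parent_dir and return its path."""
    env_dir = os.path.join(parent_dir, name)
    # with_pip=False keeps this quick; pass with_pip=True to bootstrap pip into it.
    venv.create(env_dir, with_pip=False)
    return env_dir


env = make_env(tempfile.mkdtemp())
```

The new directory contains a `pyvenv.cfg` file recording which base interpreter it was created from, which is one reason an environment can’t simply be copied to a friend’s machine.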

Compare this to deploying a statically compiled C application: you can literally just make the compiled program available for direct download. It can be just 100k or so in size, and will launch and run quickly.

There is also the approach taken by Go, which isn’t able to generate small applications like C, but instead incorporates a “runtime” into each packaged application. This approach is a compromise between Python and C, still requiring tens of megabytes for a binary, but providing for easier deployment than Python.

As a compiled language, Mojo’s deployment story is basically the same as C. For instance, a program that includes a version of matmul written from scratch is around 100k.

This means that Mojo is far more than a language for AI/ML applications. It’s actually a version of Python that allows us to write fast, small, easily-deployed applications that take advantage of all available cores and accelerators!

Alternatives to Mojo

Mojo is not the only attempt at solving the Python performance and deployment problem. In terms of languages, Julia is perhaps the strongest current alternative. It has many of the benefits of Mojo, and a lot of great projects are already built with it. The Julia folks were kind enough to invite me to give a keynote at their recent conference, and I used that opportunity to describe what I felt were the current shortcomings (and opportunities) for Julia:

As discussed in this video, Julia’s biggest challenge stems from its large runtime, which in turn stems from the decision to use garbage collection in the language. Also, the multiple-dispatch approach used in Julia is a fairly unusual choice, which both opens a lot of doors to do cool stuff in the language, but also can make things pretty complicated for devs. (I’m so enthused by this approach that I built a Python version of it – but I’m also as a result particularly aware of its limitations!)
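For readers who haven’t met multiple dispatch: the implementation of a function is chosen from the runtime types of *all* its arguments, not just the first one as with ordinary method calls. Below is a deliberately tiny sketch in Python. `dispatch` and its registry are hypothetical names for illustration; the sketch only handles exact type matches (no subclass resolution or ambiguity rules, which is where much of the real complexity lives), and it is not the API of the Python version mentioned above.

```python
import functools
from typing import Callable, Dict, Tuple

# Maps (function name, argument types) to the registered implementation.
_registry: Dict[Tuple[str, Tuple[type, ...]], Callable] = {}


def dispatch(*types):
    """Register the decorated function as the implementation for these exact argument types."""
    def decorator(fn):
        _registry[(fn.__name__, types)] = fn

        @functools.wraps(fn)
        def wrapper(*args):
            # Look up an implementation using the types of every argument.
            key = (fn.__name__, tuple(type(a) for a in args))
            if key not in _registry:
                raise TypeError(f"no {fn.__name__} for {key[1]}")
            return _registry[key](*args)
        return wrapper
    return decorator


@dispatch(int, int)
def combine(a, b):
    return a + b


@dispatch(str, int)
def combine(a, b):
    return a * b


@dispatch(int, str)
def combine(a, b):
    return b * a
```

With these definitions, `combine(2, 3)` is `5`, `combine("ab", 2)` is `"abab"`, and `combine(3, "x")` is `"xxx"`; `combine("a", "b")` raises `TypeError` because no implementation matches that pair of types.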

In Python, the most prominent current solution is probably JAX, which effectively creates a domain specific language (DSL) using Python. The output of this language is XLA, which is a machine learning compiler that predates MLIR (and is gradually being ported over to MLIR, I believe). JAX inherits the limitations of both Python (e.g. the language has no way of representing structs, or allocating memory directly, or creating fast loops) and XLA (which is largely limited to machine learning specific concepts and is primarily targeted to TPUs), but has the huge upside that it doesn’t require a new language or new compiler.
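To see what “a DSL using Python” means in practice, here is a toy tracer in the same spirit. All names are hypothetical and this is not how JAX is actually implemented. The Python function is run once on a placeholder value that records each operation instead of computing it, and the recorded list is the program that a backend compiler would then optimise. It also shows where the “subset of Python” restriction comes from: a Python `if` on a placeholder has no value to branch on, so it can’t be recorded and has to be rejected.

```python
class Tracer:
    """Placeholder value that records operations instead of computing them."""

    def __init__(self, graph, name):
        self.graph, self.name = graph, name

    def _emit(self, op, other):
        # Record "tN = op(lhs, rhs)" and return a new placeholder for the result.
        rhs = other.name if isinstance(other, Tracer) else repr(other)
        out = Tracer(self.graph, f"t{len(self.graph)}")
        self.graph.append(f"{out.name} = {op}({self.name}, {rhs})")
        return out

    def __add__(self, other):
        return self._emit("add", other)

    def __mul__(self, other):
        return self._emit("mul", other)

    def __bool__(self):
        # Data-dependent Python control flow can't be captured in the trace.
        raise TypeError("cannot branch on a traced value")


def trace(fn):
    """Run fn once on a placeholder input and return the list of recorded ops."""
    graph = []
    fn(Tracer(graph, "x"))
    return graph


def f(x):
    return x * 2.0 + 1.0
```

Here `trace(f)` returns `["t0 = mul(x, 2.0)", "t1 = add(t0, 1.0)"]`, while tracing something like `lambda x: x if x else x` raises `TypeError`.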

As previously discussed, there’s also the new PyTorch compiler, and also TensorFlow is able to generate XLA code. Personally, I find using Python in this way ultimately unsatisfying. I don’t actually get to use all the power of Python, but have to use a subset that’s compatible with the backend I’m targeting. I can’t easily debug and profile the compiled code, and there’s so much “magic” going on that it’s hard to even know what actually ends up getting executed. I don’t even end up with a standalone binary, but instead have to use special runtimes and deal with complex APIs. (I’m not alone here – everyone I know that has used PyTorch or TensorFlow for targeting edge devices or optimised serving infrastructure has described it as being one of the most complex and frustrating tasks they’ve attempted! And I’m not sure I even know anyone that’s actually completed either of these things using JAX.)

Another interesting direction for Python is Numba and Cython. I’m a big fan of these projects and have used both in my teaching and software development. Numba uses a special decorator to cause a Python function to be compiled into optimised machine code using LLVM. Cython is similar, but also provides a Python-like language which has some of the features of Mojo, and converts this Python dialect into C, which is then compiled. Neither project solves the deployment challenge, but they can help a lot with the performance problem.
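A sketch of the decorator approach, using a Mandelbrot escape-time function (mandelbrot was one of the two demo examples mentioned earlier). It assumes Numba is installed, in which case `numba.njit` compiles the function to machine code via LLVM the first time it is called. The fallback when Numba is missing is an addition for this sketch only, so the snippet still runs, just at interpreted speed.

```python
try:
    from numba import njit  # compiles the decorated function with LLVM on first call
except ImportError:
    def njit(fn):
        """Fallback when Numba isn't installed: leave the function as plain Python."""
        return fn


@njit
def mandelbrot_escape(cr, ci, max_iter):
    """Iterations before z -> z*z + c leaves |z| <= 2, for c = cr + ci*i (capped at max_iter)."""
    zr = 0.0
    zi = 0.0
    for n in range(max_iter):
        if zr * zr + zi * zi > 4.0:
            return n
        # z*z + c, with the real and imaginary parts written out
        zr, zi = zr * zr - zi * zi + cr, 2.0 * zr * zi + ci
    return max_iter
```

For instance, points inside the set such as `c = 0` or `c = -1` return `max_iter`, while `mandelbrot_escape(2.0, 0.0, 50)` returns `2`. The function body is ordinary Python either way; only the decorator changes how it is executed.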

Neither is able to target a range of accelerators with generic cross-platform code, although Numba does provide a very useful way to write CUDA code (and so allows NVIDIA GPUs to be targeted).

I’m really grateful Numba and Cython exist, and have personally gotten a lot out of them. However, they’re not at all the same as using a complete language and compiler that generates standalone binaries. They’re band-aid solutions for Python’s performance problems, and are fine for situations where that’s all you need.

But I’d much prefer to use a language that’s as elegant as Python and as fast as expert-written C, allows me to use one language to write everything from the application server, to the model architecture and the installer too, and lets me debug and profile my code directly in the language in which I wrote it.

How would you like a language like that?