Not everyone you disagree with on social media is a bot, but various forms of social media manipulation are certainly used to spread false narratives, influence democratic processes and affect stock prices.

In 2019, the global cost of bad actors on the internet was conservatively estimated at $78 billion. In the meantime, misinformation strategies have kept evolving: Detecting them has so far been a reactive affair, with malicious actors always one step ahead.

Alexander Nwala, a William & Mary assistant professor of data science, aims to address these forms of abuse proactively. With colleagues at the Indiana University Observatory on Social Media, he has recently published a paper in EPJ Data Science introducing BLOC, a universal language framework for modeling social media behaviors.

“The main idea behind this framework is not to target a specific behavior, but instead to provide a language that can describe behaviors,” said Nwala.

Automated bots mimicking human actions have become more sophisticated over time. Inauthentic coordinated behavior is another common form of deception, carried out through actions that may not look suspicious at the level of an individual account but are actually part of a strategy involving multiple accounts.

However, not all automated or coordinated behavior is necessarily malicious. BLOC does not classify activities as “good” or “bad”; instead, it gives researchers a language to describe social media behaviors, which makes potentially malicious actions easier to identify.

A user-friendly tool for investigating suspicious account behavior is in the works at William & Mary. Ian MacDonald ’25, technical director of the W&M undergraduate-led DisinfoLab, is building a BLOC-based website that will be accessible to researchers, journalists and the general public.

Checking for automation and coordination

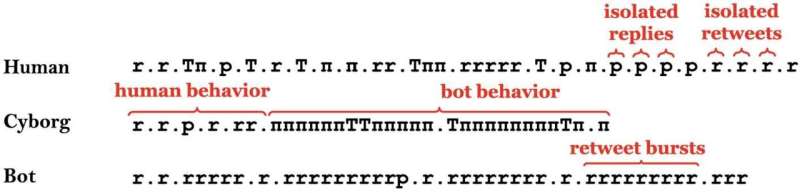

The process, Nwala explained, starts with sampling posts from a given social media account within a specific timeframe and encoding information using specific alphabets.

BLOC, which stands for “Behavioral Languages for Online Characterization,” relies on action and content alphabets to represent user behavior in a way that can be easily adapted to different social media platforms.

For instance, a string like “TpπR” indicates a sequence of four user actions: a broadcast post, a reply to a non-friend, a reply to the user’s own post and a repost of a friend’s message.
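To make the action alphabet concrete, here is a minimal sketch that encodes a short timeline as a BLOC action string. It is an illustration, not the BLOC implementation: the `Action` type, the `encode_actions` function and the category names are hypothetical, and the symbol table covers only the four symbols in the “TpπR” example, with the meanings given above.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical symbol table: only the four action symbols that appear in
# the "TpπR" example, keyed by (action kind, target of the action).
ACTION_SYMBOLS = {
    ("post", None): "T",           # broadcast post
    ("reply", "non_friend"): "p",  # reply to a non-friend
    ("reply", "self"): "π",        # reply to the user's own post
    ("repost", "friend"): "R",     # repost of a friend's message
}


@dataclass
class Action:
    kind: str              # "post", "reply" or "repost"
    target: Optional[str]  # whom the action is directed at, if anyone


def encode_actions(actions: list) -> str:
    """Map each action, in chronological order, to one alphabet symbol."""
    return "".join(ACTION_SYMBOLS[(a.kind, a.target)] for a in actions)


timeline = [
    Action("post", None),
    Action("reply", "non_friend"),
    Action("reply", "self"),
    Action("repost", "friend"),
]
print(encode_actions(timeline))  # TpπR
```

Because each action maps to a single symbol, the resulting string preserves the order of the account's activity, which is what later steps break into words.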

Using the content alphabet, the same set of actions can be characterized as “(t)(EEH)(UM)(m)” if the user’s posts respectively contain text; two images and a hashtag; a link and a mention of a friend; and a mention of a non-friend.
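The content alphabet can be sketched the same way, with one parenthesized group per post. Again this is a hypothetical illustration: `encode_post`, `encode_content`, the element names and the symbol table are assumptions restricted to the symbols in the “(t)(EEH)(UM)(m)” example.

```python
# Hypothetical symbol table covering only the content symbols in the
# "(t)(EEH)(UM)(m)" example.
CONTENT_SYMBOLS = {
    "text": "t",
    "image": "E",
    "hashtag": "H",
    "link": "U",
    "friend_mention": "M",
    "non_friend_mention": "m",
}


def encode_post(elements: list) -> str:
    """Encode one post's content elements as a parenthesized group."""
    return "(" + "".join(CONTENT_SYMBOLS[e] for e in elements) + ")"


def encode_content(posts: list) -> str:
    """Concatenate the groups for each post, in chronological order."""
    return "".join(encode_post(p) for p in posts)


posts = [
    ["text"],
    ["image", "image", "hashtag"],
    ["link", "friend_mention"],
    ["non_friend_mention"],
]
print(encode_content(posts))  # (t)(EEH)(UM)(m)
```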

The resulting BLOC strings are then tokenized into words, which can represent different behaviors. “Once we have these words, we build what we call vectors, mathematical representations of these words,” said Nwala. “So we’ll have various BLOC words and then the number of times a user expressed the word or behavior.”
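The article does not say exactly how BLOC strings are split into words. Assuming, purely for illustration, that words are overlapping two-symbol slices, the counting step Nwala describes (each word paired with the number of times the user expressed it) could be sketched as follows; `tokenize`, `to_vector` and the sample string are hypothetical.

```python
from collections import Counter


def tokenize(bloc_string: str, n: int = 2) -> list:
    """Split a BLOC string into overlapping n-symbol 'words'.
    Overlapping bigrams are an assumption made for illustration."""
    return [bloc_string[i:i + n] for i in range(len(bloc_string) - n + 1)]


def to_vector(bloc_string: str, vocabulary: list, n: int = 2) -> list:
    """Count how many times each vocabulary word occurs in the string."""
    counts = Counter(tokenize(bloc_string, n))
    return [counts[word] for word in vocabulary]


account = "TTTRTTTR"  # made-up action string: mostly posts, some reposts
vocab = sorted(set(tokenize(account)))
print(vocab)                      # ['RT', 'TR', 'TT']
print(to_vector(account, vocab))  # [1, 2, 4]
```

In practice the vocabulary would be built from many accounts at once, so that every account's vector has the same positions.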

Once the vectors are obtained, the data is run through a machine learning algorithm trained to identify patterns that distinguish between different classes of users (e.g., machines and humans).

Human and bot-like behaviors sit at opposite ends of a spectrum: In between, there are “cyborg-like” accounts that oscillate between the two.

“We create models that capture machine and human behavior, and then we find out whether unknown accounts are closer to humans or to machines,” said Nwala.
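One simple way to ask whether an unknown account is “closer to humans or to machines” is to compare its word-count vector with the average vector of each class. The sketch below swaps in a nearest-centroid rule with cosine similarity as a stand-in for the trained machine learning model described above; the function names and toy training vectors are invented, and the in-between score is only a loose analogue of the cyborg-like middle ground.

```python
import math


def cosine(u: list, v: list) -> float:
    """Cosine similarity of two equal-length count vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def centroid(vectors: list) -> list:
    """Element-wise mean of a list of vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def bot_score(vector: list, human_c: list, bot_c: list) -> float:
    """Score in [0, 1]: near 0 is human-like, near 1 is bot-like,
    and values in between suggest a mix of the two."""
    s_human = cosine(vector, human_c)
    s_bot = cosine(vector, bot_c)
    total = s_human + s_bot
    return s_bot / total if total else 0.5


# Toy labeled vectors over a made-up three-word vocabulary.
humans = [[4, 1, 0], [3, 2, 1]]
bots = [[0, 1, 6], [1, 0, 5]]
human_c, bot_c = centroid(humans), centroid(bots)

unknown = [0, 1, 5]
print(round(bot_score(unknown, human_c, bot_c), 2))  # well above 0.5
```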

Using the BLOC framework does not merely facilitate bot detection, matching or outperforming current detection methods; it also allows the identification of similarities between human-led accounts. Nwala pointed out that BLOC has also been applied to detect coordinated inauthentic accounts engaged in information operations from countries that attempted to influence elections in the U.S. and the West.

“Similarity is a very useful metric,” he said. “If two accounts are doing almost the same thing, you can compare their behaviors using BLOC to see if perhaps they’re controlled by the same person and then investigate their behavior further.”
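Comparing accounts pairwise could work along similar lines. In this hypothetical sketch, each account’s BLOC action string becomes a set of bigram counts, and every pair whose cosine similarity meets a threshold is flagged for closer inspection. The account names, strings and the 0.9 threshold are made up, and a high score only suggests shared behavior, not common control.

```python
import math
from collections import Counter
from itertools import combinations


def bigram_counts(s: str) -> Counter:
    """Count overlapping two-symbol words (an illustrative tokenization)."""
    return Counter(s[i:i + 2] for i in range(len(s) - 1))


def cosine(c1: Counter, c2: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(c1[w] * c2[w] for w in c1.keys() & c2.keys())
    n1 = math.sqrt(sum(v * v for v in c1.values()))
    n2 = math.sqrt(sum(v * v for v in c2.values()))
    return dot / (n1 * n2) if n1 and n2 else 0.0


def similar_pairs(accounts: dict, threshold: float = 0.9) -> list:
    """Return (account, account, similarity) for pairs at or above threshold."""
    vectors = {name: bigram_counts(s) for name, s in accounts.items()}
    pairs = []
    for a, b in combinations(sorted(vectors), 2):
        sim = cosine(vectors[a], vectors[b])
        if sim >= threshold:
            pairs.append((a, b, round(sim, 3)))
    return pairs


accounts = {
    "acct_a": "TRTRTRTRTR",  # alternates posts and reposts
    "acct_b": "RTRTRTRTRT",  # same rhythm, shifted by one action
    "acct_c": "TpπTTpTπpT",  # posts and replies, no reposts
}
print(similar_pairs(accounts))  # [('acct_a', 'acct_b', 0.976)]
```

Only the first two accounts are flagged: they share the same alternating rhythm, while the third account never reposts and so shares no words with them.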

BLOC is so far unique in addressing different forms of manipulation and is well positioned to survive platform changes that can make popular detection tools obsolete.

“Also, if a new form of behavior arises that we want to study, we don’t need to start from scratch,” said Nwala. “We can just use BLOC to study that behavior and possibly detect it.”

Beyond online bad actors

As Nwala points out to students in his class on Web Science (the science of decentralized information structures), studying web tools and technologies requires taking social, cultural and psychological dimensions into account.

“As we interact with technologies, all of these forces come together,” he said.

Nwala suggested potential future applications of BLOC in areas such as mental health, since the framework supports the study of behavioral shifts in social media activity.

Research on social media, however, has recently been limited by the restrictions social media platforms have imposed on their application programming interfaces (APIs).

“Research like this was only possible because of the availability of APIs to collect large amounts of data,” said Nwala. “Manipulators will be able to afford whatever it takes to continue their behaviors, but researchers on the other side will not.”

According to Nwala, such limitations affect not only researchers but also society at large, as these studies help raise awareness of social media manipulation and contribute to effective policymaking.

“Just as there’s been this steady outcry about how the slow decline of local news media affects the democratic process, I think this rises to that level,” he said. “The ability of good-faith researchers to collect and analyze social media data at a large scale is a public good that needs to not be restricted.”

More information:

Alexander C. Nwala et al., A language framework for modeling social media account behavior, EPJ Data Science (2023). DOI: 10.1140/epjds/s13688-023-00410-9

William & Mary

Citation:

Modeling social media behaviors to combat misinformation: A universal language framework (2023, September 15)

retrieved 15 September 2023

from

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.