The idea of Depthwise Separable Convolutions (DSC) was first proposed by Laurent Sifre in his PhD thesis titled Rigid-Motion Scattering For Image Classification. Since then, they have been used successfully in various popular deep convolutional networks such as XceptionNet and MobileNet.

The main difference between a regular convolution and a DSC is that a DSC is composed of two convolutions, as described below:

- A depthwise grouped convolution, where the number of output channels equals the number of input channels m, so that each output channel depends on only a single input channel. In PyTorch, this is called a "grouped" convolution (an nn.Conv2d with groups set to the number of input channels). You can read more about grouped convolutions in the PyTorch documentation for nn.Conv2d.

- A pointwise convolution (filter size = 1), which operates like a regular convolution: each of the n filters operates on all m input channels to produce a single output value.
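To make the two stages concrete, here is a minimal, dependency-free sketch of a DSC forward pass on nested Python lists. It assumes stride 1, zero "same" padding, an odd kernel size and no bias; the function names are hypothetical and chosen for illustration, and like PyTorch's Conv2d it computes a cross-correlation.

```python
# Tensors are nested lists indexed as x[channel][row][col].

def depthwise_conv(x, kernels):
    """Apply one dk x dk kernel per input channel (groups == channels)."""
    m, h, w = len(x), len(x[0]), len(x[0][0])
    dk = len(kernels[0])
    pad = dk // 2  # "same" padding for an odd kernel size
    out = [[[0.0] * w for _ in range(h)] for _ in range(m)]
    for c in range(m):  # output channel c only ever reads input channel c
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for ki in range(dk):
                    for kj in range(dk):
                        r, s = i + ki - pad, j + kj - pad
                        if 0 <= r < h and 0 <= s < w:  # zero padding outside
                            acc += kernels[c][ki][kj] * x[c][r][s]
                out[c][i][j] = acc
    return out

def pointwise_conv(x, weights):
    """Apply n 1x1 filters; weights[o][c] mixes input channel c into output o."""
    m, h, w = len(x), len(x[0]), len(x[0][0])
    return [
        [[sum(weights[o][c] * x[c][i][j] for c in range(m)) for j in range(w)]
         for i in range(h)]
        for o in range(len(weights))
    ]

def depthwise_separable_conv(x, dw_kernels, pw_weights):
    # Stage 1: per-channel spatial filtering; stage 2: 1x1 channel mixing
    return pointwise_conv(depthwise_conv(x, dw_kernels), pw_weights)
```

For example, with two 3 x 3 input channels, an identity kernel on the first channel and an all-ones kernel on the second, the depthwise stage leaves channel 0 unchanged and box-sums channel 1; a single pointwise filter `[[1, 1]]` then adds the two results together.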

Let’s perform the same exercise for DSCs that we did for regular convolutions and compute the number of trainable parameters and computations.

Analysis of Trainable Parameters: The "grouped" convolution has m filters, each with dₖ x dₖ learnable parameters, and produces m output channels. This results in a total of m x dₖ x dₖ learnable parameters. The pointwise convolution has n filters of size m x 1 x 1, which adds up to n x m x 1 x 1 learnable parameters. Let’s look at the PyTorch code below to validate our understanding.
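Written as a single expression (biases omitted, matching `bias=False` in the code), the parameter count is:

$$
P_{\text{DSC}} = \underbrace{m \cdot d_k \cdot d_k}_{\text{depthwise}} + \underbrace{n \cdot m \cdot 1 \cdot 1}_{\text{pointwise}} = m\,d_k^2 + n\,m
$$

With m = 16, n = 32 and dₖ = 3 this gives 16 · 9 + 32 · 16 = 144 + 512 = 656.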

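```python
import torch.nn as nn

# Example sizes consistent with the output below:
# m input channels, n output channels, dk x dk kernel
m, n, dk = 16, 32, 3

def num_parameters(model):
    # Minimal helper: total number of trainable parameters in a module
    return sum(p.numel() for p in model.parameters() if p.requires_grad)

class DepthwiseSeparableConv(nn.Sequential):
    def __init__(self, chin, chout, dk):
        super().__init__(
            # Depthwise convolution: groups=chin gives one dk x dk filter per input channel;
            # padding=dk // 2 keeps the spatial size for odd kernel sizes
            nn.Conv2d(chin, chin, kernel_size=dk, stride=1, padding=dk // 2, bias=False, groups=chin),
            # Pointwise convolution: chout filters of size chin x 1 x 1
            nn.Conv2d(chin, chout, kernel_size=1, bias=False),
        )

conv2 = DepthwiseSeparableConv(chin=m, chout=n, dk=dk)

print(f"Expected number of parameters: {m * dk * dk + m * 1 * 1 * n}")
print(f"Actual number of parameters: {num_parameters(conv2)}")
```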

This prints:

```
Expected number of parameters: 656
Actual number of parameters: 656
```

We can see that the DSC version has roughly 7x fewer parameters: 656, compared with n x m x dₖ x dₖ = 32 x 16 x 3 x 3 = 4,608 for a regular convolution with the same shapes. Next, let’s turn our attention to the computational cost of a DSC layer.

Analysis of Computational Cost: Let’s assume our input has dimensions m x h x w (m channels with a spatial size of h x w), and that both convolutions use stride 1 with "same" padding, so the output keeps the h x w spatial size. In the grouped convolution segment of the DSC, we have m filters, each of size dₖ x dₖ. Each filter is applied to its corresponding input channel at every one of the h x w output positions, resulting in a segment cost of m x dₖ x dₖ x h x w. For the pointwise convolution, we apply n filters of size m x 1 x 1 to produce n output channels, resulting in a segment cost of n x m x 1 x 1 x h x w. The total cost is the sum of the grouped and pointwise costs. Let’s validate this using the torchinfo package.
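Collecting the two segment costs into one formula (same assumptions: stride 1, "same" padding, cost counted in multiply-adds):

$$
C_{\text{DSC}} = \underbrace{m \cdot d_k \cdot d_k \cdot h \cdot w}_{\text{depthwise}} + \underbrace{n \cdot m \cdot 1 \cdot 1 \cdot h \cdot w}_{\text{pointwise}} = m\,h\,w\,\left(d_k^2 + n\right)
$$

With the values used in this example (m = 16, n = 32, dₖ = 3, h = w = 128), this is 16 · 128 · 128 · (9 + 32) = 10,747,904.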

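```python
from torchinfo import summary

h, w = 128, 128  # spatial size of the input feature map

# Expected cost: depthwise (m * dk * dk * h * w) + pointwise (m * h * w * n)
print(f"Expected total multiplies: {m * dk * dk * h * w + m * 1 * 1 * h * w * n}")

# verbose=0 suppresses the automatic printout so the summary is printed once, below
s2 = summary(conv2, input_size=(1, m, h, w), verbose=0)
print(f"Actual multiplies: {s2.total_mult_adds}")
print(s2)
```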

This prints:

```
Expected total multiplies: 10747904
Actual multiplies: 10747904
==========================================================================================
Layer (type:depth-idx)                   Output Shape              Param #
==========================================================================================
DepthwiseSeparableConv                   [1, 32, 128, 128]         --
├─Conv2d: 1-1                            [1, 16, 128, 128]         144
├─Conv2d: 1-2                            [1, 32, 128, 128]         512
==========================================================================================
Total params: 656
Trainable params: 656
Non-trainable params: 0
Total mult-adds (M): 10.75
==========================================================================================
Input size (MB): 1.05
Forward/backward pass size (MB): 6.29
Params size (MB): 0.00
Estimated Total Size (MB): 7.34
==========================================================================================
```
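The summary numbers can also be reconstructed by hand from the layer shapes, which is a useful sanity check when torchinfo is not available. This is a back-of-the-envelope sketch under our own assumptions, not torchinfo's documented internals: float32 values (4 bytes), batch size 1, and a forward/backward figure that counts every layer output twice (activations plus gradients). Under those assumptions it happens to reproduce the figures above.

```python
# Assumed sizes from the example: m -> n channels, dk x dk kernel, h x w input
m, n, dk, h, w = 16, 32, 3, 128, 128
BYTES = 4  # assumption: float32 tensors

# (parameter count, output channels) for each layer of the DSC
layers = {
    "depthwise": (m * dk * dk, m),
    "pointwise": (n * m, n),
}

total_params = sum(p for p, _ in layers.values())
# With stride 1 and "same" padding, each weight is used once per output position
total_mult_adds = sum(p * h * w for p, _ in layers.values())

input_mb = m * h * w * BYTES / 1e6
# Assumption: each layer output is stored once for the forward and once for the backward pass
fwd_bwd_mb = 2 * sum(c * h * w for _, c in layers.values()) * BYTES / 1e6
params_mb = total_params * BYTES / 1e6

print(total_params, total_mult_adds)
print(round(input_mb, 2), round(fwd_bwd_mb, 2), round(params_mb, 2),
      round(input_mb + fwd_bwd_mb + params_mb, 2))
```

Note that the pointwise layer accounts for 8,388,608 of the 10,747,904 multiply-adds, so once n is large the 1 x 1 convolution dominates the cost of a DSC.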

Let’s compare the sizes and costs of both kinds of convolution for a few examples to gain some intuition.

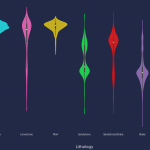

Size and cost comparison for regular and depthwise separable convolutions

To compare the size and cost of regular and depthwise separable convolutions, we assume an input size of 128 x 128 to the network, a kernel size of 3 x 3, and a network that progressively halves the spatial dimensions and doubles the number of channels. We assume a single 2D convolution layer at every step, but in practice there could be more.
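The setup above can be sketched in a few lines of Python. The starting point is our own assumption for illustration (a first layer mapping 16 to 32 channels at 128 x 128, as in the earlier example, followed by four more halving/doubling steps), with stride 1, "same" padding and no bias; it is not meant to reproduce the figure's exact rows.

```python
def regular_cost(m, n, dk, h, w):
    # n filters spanning all m channels with a dk x dk window
    params = n * m * dk * dk
    return params, params * h * w  # (parameters, mult-adds)

def dsc_cost(m, n, dk, h, w):
    # depthwise m x dk x dk plus pointwise n x m x 1 x 1
    params = m * dk * dk + n * m
    return params, params * h * w  # each weight used once per output position

def compare(start_channels=16, size=128, dk=3, steps=5):
    """Return (size, m, n, param ratio, mult-add ratio) for each step."""
    rows = []
    m = start_channels
    for _ in range(steps):
        n = 2 * m  # each step doubles the channel count
        reg_p, reg_c = regular_cost(m, n, dk, size, size)
        dsc_p, dsc_c = dsc_cost(m, n, dk, size, size)
        rows.append((size, m, n, dsc_p / reg_p, dsc_c / reg_c))
        m, size = n, size // 2  # next step halves the spatial size
    return rows

for size, m, n, p_ratio, c_ratio in compare():
    print(f"{size:>3}x{size:<3} {m:>4}->{n:<4} params {p_ratio:6.1%}  mult-adds {c_ratio:6.1%}")
```

Under these assumptions the ratio starts around 14% for the 16-to-32-channel layer and falls toward 11% as the channel count grows; the exact average depends on where the channel progression starts.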

You can see that, on average, both the size and the computational cost of a DSC are about 11% to 12% of those of a regular convolution for the configuration mentioned above.
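The 11% to 12% figure follows directly from the cost formulas. A regular convolution with the same shapes (stride 1, "same" padding, no bias) costs n · m · dₖ² · h · w multiply-adds, so the ratio of the DSC cost to the regular cost is:

$$
\frac{C_{\text{DSC}}}{C_{\text{regular}}}
= \frac{m\,d_k^2\,h\,w + n\,m\,h\,w}{n\,m\,d_k^2\,h\,w}
= \frac{1}{n} + \frac{1}{d_k^2}
$$

The parameter ratio is the same expression with the h · w factors removed. With dₖ = 3, the 1/dₖ² term sets a floor of about 11.1%, and the 1/n term shrinks as the channel count doubles at each step, which is consistent with the ratios settling in the 11% to 12% range.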

Now that we have developed a good understanding of the types of convolutions and their relative costs, you may be wondering whether there is any downside to using DSCs. Everything we’ve seen so far seems to suggest that they’re better in every way! However, we haven’t yet considered an important aspect: the impact they have on the accuracy of our model. Let’s dive into it through an experiment below.