Visible language is the type of communication that depends on pictorial symbols outdoors of textual content to convey data. It’s ubiquitous in our digital life within the type of iconography, infographics, tables, plots, and charts, extending to the true world in road indicators, comedian books, meals labels, and many others. For that motive, having computer systems higher perceive this sort of media can assist with scientific communication and discovery, accessibility, and information transparency.

Whereas pc imaginative and prescient fashions have made great progress utilizing learning-based options for the reason that creation of ImageNet, the main target has been on pure pictures, the place all kinds of duties, similar to classification, visual question answering (VQA), captioning, detection and segmentation, have been outlined, studied and in some circumstances superior to achieve human efficiency. Nevertheless, visible language has not garnered an analogous stage of consideration, probably due to the shortage of large-scale coaching units on this house. However over the previous few years, new tutorial datasets have been created with the purpose of evaluating query answering programs on visible language pictures, like PlotQA, InfographicsVQA, and ChartQA.

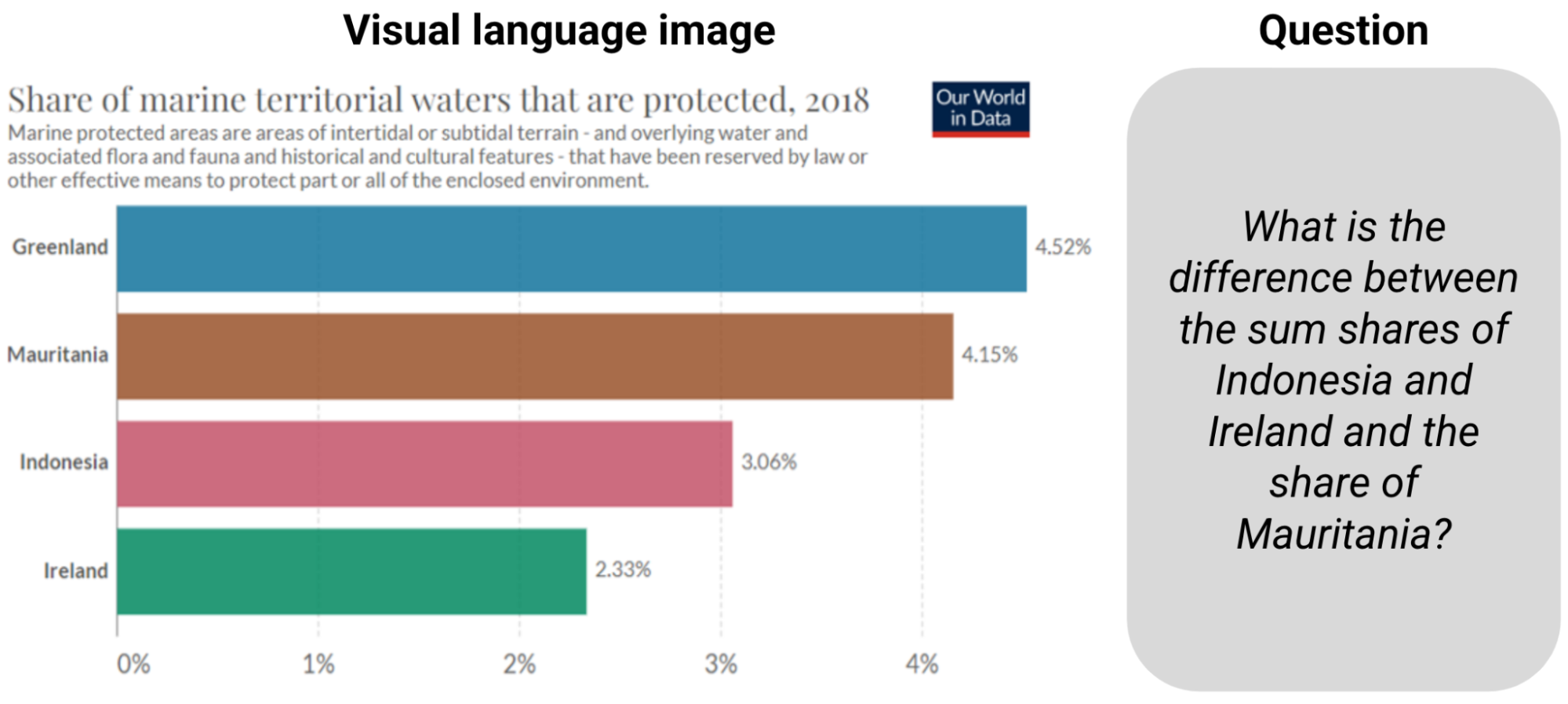

|

| Instance from ChartQA. Answering the query requires studying the knowledge and computing the sum and the distinction. |

Present fashions constructed for these duties relied on integrating optical character recognition (OCR) data and their coordinates into bigger pipelines however the course of is error susceptible, sluggish, and generalizes poorly. The prevalence of those strategies was as a result of present end-to-end pc imaginative and prescient fashions primarily based on convolutional neural networks (CNNs) or transformers pre-trained on pure pictures couldn’t be simply tailored to visible language. However present fashions are ill-prepared for the challenges in answering questions on charts, together with studying the relative top of bars or the angle of slices in pie charts, understanding axis scales, appropriately mapping pictograms with their legend values with colours, sizes and textures, and eventually performing numerical operations with the extracted numbers.

In gentle of those challenges, we suggest “MatCha: Enhancing Visual Language Pretraining with Math Reasoning and Chart Derendering”. MatCha, which stands for math and charts, is a pixels-to-text basis mannequin (a pre-trained mannequin with built-in inductive biases that may be fine-tuned for a number of purposes) skilled on two complementary duties: (a) chart de-rendering and (b) math reasoning. In chart de-rendering, given a plot or chart, the image-to-text mannequin is required to generate its underlying information desk or the code used to render it. For math reasoning pre-training, we decide textual numerical reasoning datasets and render the enter into pictures, which the image-to-text mannequin must decode for solutions. We additionally suggest “DePlot: One-shot visual language reasoning by plot-to-table translation”, a mannequin constructed on high of MatCha for one-shot reasoning on charts through translation to tables. With these strategies we surpass the earlier state-of-the-art in ChartQA by greater than 20% and match the very best summarization programs which have 1000 occasions extra parameters. Each papers will probably be introduced at ACL2023.

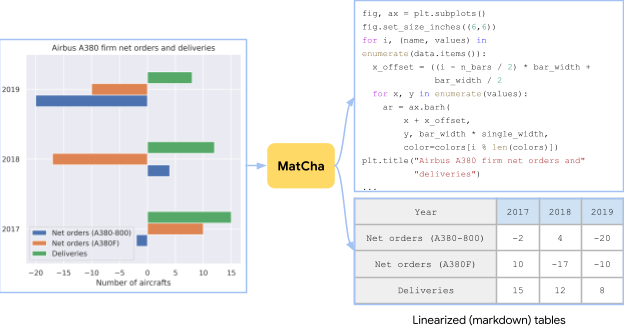

Chart de-rendering

Plots and charts are normally generated by an underlying information desk and a chunk of code. The code defines the general format of the determine (e.g., sort, course, coloration/form scheme) and the underlying information desk establishes the precise numbers and their groupings. Each the information and code are despatched to a compiler/rendering engine to create the ultimate picture. To grasp a chart, one wants to find the visible patterns within the picture and successfully parse and group them to extract the important thing data. Reversing the plot rendering course of calls for all such capabilities and might thus function a great pre-training job.

|

| A chart created from a desk within the Airbus A380 Wikipedia page utilizing random plotting choices. The pre-training job for MatCha consists of recovering the supply desk or the supply code from the picture. |

In observe, it’s difficult to concurrently get hold of charts, their underlying information tables, and their rendering code. To gather enough pre-training information, we independently accumulate [chart, code] and [chart, table] pairs. For [chart, code], we crawl all GitHub IPython notebooks with applicable licenses and extract blocks with figures. A determine and the code block proper earlier than it are saved as a [chart, code] pair. For [chart, table] pairs, we explored two sources. For the primary supply, artificial information, we manually write code to transform web-crawled Wikipedia tables from the TaPas codebase to charts. We sampled from and mixed a number of plotting choices relying on the column sorts. As well as, we additionally add [chart, table] pairs generated in PlotQA to diversify the pre-training corpus. The second supply is web-crawled [chart, table] pairs. We straight use the [chart, table] pairs crawled within the ChartQA coaching set, containing round 20k pairs in whole from 4 web sites: Statista, Pew, Our World in Data, and OECD.

Math reasoning

We incorporate numerical reasoning information into MatCha by studying math reasoning expertise from textual math datasets. We use two present textual math reasoning datasets, MATH and DROP for pre-training. MATH is synthetically created, containing two million coaching examples per module (sort) of questions. DROP is a reading-comprehension–fashion QA dataset the place the enter is a paragraph context and a query.

To resolve questions in DROP, the mannequin must learn the paragraph, extract related numbers and carry out numerical computation. We discovered each datasets to be complementary. MATH comprises a lot of questions throughout totally different classes, which helps us establish math operations wanted to explicitly inject into the mannequin. DROP’s reading-comprehension format resembles the everyday QA format whereby fashions concurrently carry out data extraction and reasoning. In observe, we render inputs of each datasets into pictures. The mannequin is skilled to decode the reply.

|

| To enhance the maths reasoning expertise of MatCha we incorporate examples from MATH and DROP into the pre-training goal, by rendering the enter textual content as pictures. |

Finish-to-end outcomes

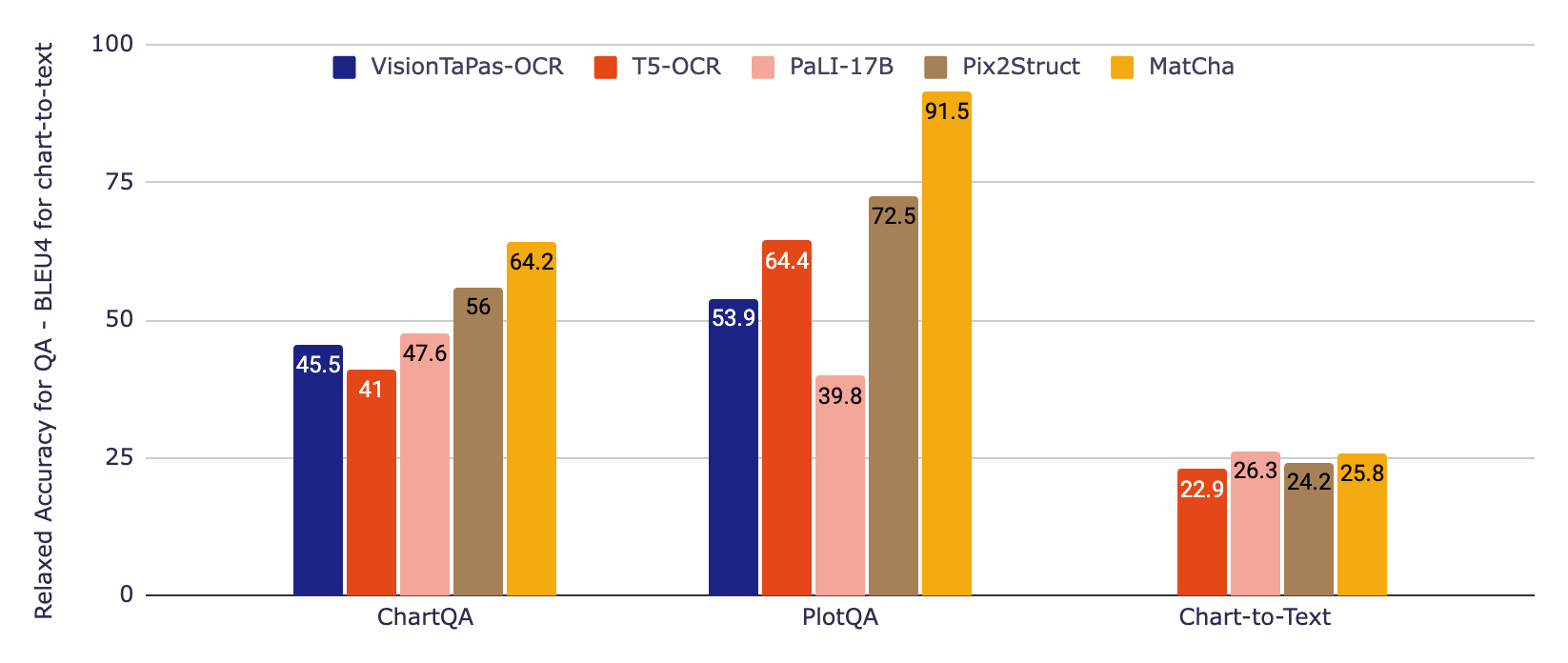

We use a Pix2Struct mannequin spine, which is an image-to-text transformer tailor-made for web site understanding, and pre-train it with the 2 duties described above. We reveal the strengths of MatCha by fine-tuning it on a number of visible language duties — duties involving charts and plots for query answering and summarization the place no entry to the underlying desk is feasible. MatCha surpasses earlier fashions’ efficiency by a big margin and in addition outperforms the earlier state-of-the-art, which assumes entry to underlying tables.

Within the determine beneath, we first consider two baseline fashions that incorporate data from an OCR pipeline, which till lately was the usual method for working with charts. The primary is predicated on T5, the second on VisionTaPas. We additionally evaluate towards PaLI-17B, which is a big (~1000 occasions bigger than the opposite fashions) picture plus text-to-text transformer skilled on a various set of duties however with restricted capabilities for studying textual content and different types of visible language. Lastly, we report the Pix2Struct and MatCha mannequin outcomes.

For QA datasets, we use the official relaxed accuracy metric that enables for small relative errors in numerical outputs. For chart-to-text summarization, we report BLEU scores. MatCha achieves noticeably improved outcomes in comparison with baselines for query answering, and comparable outcomes to PaLI in summarization, the place massive dimension and in depth lengthy textual content/captioning technology pre-training are advantageous for this type of long-form textual content technology.

Derendering plus massive language mannequin chains

Whereas extraordinarily performant for his or her variety of parameters, notably on extractive duties, we noticed that fine-tuned MatCha fashions may nonetheless battle with end-to-end complicated reasoning (e.g., mathematical operations involving massive numbers or a number of steps). Thus, we additionally suggest a two-step methodology to sort out this: 1) a mannequin reads a chart, then outputs the underlying desk, 2) a big language mannequin (LLM) reads this output after which tries to reply the query solely primarily based on the textual enter.

For the primary mannequin, we fine-tuned MatCha solely on the chart-to-table job, rising the output sequence size to ensure it may recuperate all or a lot of the data within the chart. DePlot is the ensuing mannequin. Within the second stage, any LLM (similar to FlanPaLM or Codex) can be utilized for the duty, and we will depend on the usual strategies to extend efficiency on LLMs, for instance chain-of-thought and self-consistency. We additionally experimented with program-of-thoughts the place the mannequin produces executable Python code to dump complicated computations.

|

| An illustration of the DePlot+LLM methodology. This can be a actual instance utilizing FlanPaLM and Codex. The blue packing containers are enter to the LLM and the pink packing containers include the reply generated by the LLMs. We spotlight a few of the key reasoning steps in every reply. |

As proven within the instance above, the DePlot mannequin together with LLMs outperforms fine-tuned fashions by a major margin, particularly so within the human-sourced portion of ChartQA, the place the questions are extra pure however demand harder reasoning. Moreover, DePlot+LLM can accomplish that with out entry to any coaching information.

Now we have launched the brand new fashions and code at our GitHub repo, the place you possibly can attempt it out your self in colab. Checkout the papers for MatCha and DePlot for extra particulars on the experimental outcomes. We hope that our outcomes can profit the analysis neighborhood and make the knowledge in charts and plots extra accessible to everybody.

Acknowledgements

This work was carried out by Fangyu Liu, Julian Martin Eisenschlos, Francesco Piccinno, Syrine Krichene, Chenxi Pang, Kenton Lee, Mandar Joshi, Wenhu Chen and Yasemin Altun from our Language Team as a part of Fangyu’s internship venture. Nigel Collier from Cambridge additionally was a collaborator. We want to thank Joshua Howland, Alex Polozov, Shrestha Basu Mallick, Massimo Nicosia and William Cohen for his or her invaluable feedback and solutions.