Anamorph, a new filmmaking and technology company, announced its launch today. The startup, founded by filmmaker Gary Hustwit and digital artist Brendan Dawes, wants to reshape the cinematic experience with proprietary generative technology capable of producing a film that changes every time it's shown.

Anamorph revealed its innovative technology at the 2024 Sundance Film Festival when it debuted its first documentary, “Eno,” which follows English musician, producer and visual artist Brian Eno, who has worked with legends David Bowie, U2, Coldplay, Grace Jones, Talking Heads and many others. His primary focus is experimenting with generative music software.

“Brian seemed like the perfect candidate for [using Anamorph’s software] since he’s always been about pushing for technology and how it can be used in art and music,” Hustwit tells TechCrunch.

Each time “Eno” was shown at Sundance, the generative media platform assembled a new cut, selecting scenes from more than 500 hours of restored archival footage and interviews, along with animated visuals and music. Anamorph's system can generate billions of potential sequences, so each audience sees a unique film.

Admittedly, we were skeptical at first. Our biggest question was: will the order of scenes even make sense? But as Hustwit points out, the purpose of the generative system isn’t to deliver films with a “chronological arc.”

“You can still have an engaging narrative arc in a film, sort of what we expect when we see a [normal] documentary… even if the scenes, footage, music, and the sequences change, we can still get an engaging, cohesive story. It helps, in this case, that it’s all about one person,” he notes. “Your brain is trying to make the connections and figure out the story. And that story changes according to how you receive the information and how it’s paced out.”

It also helps that the first and last scenes of “Eno” are always the same. Plus, there are certain scenes pinned to the same timeslot in each version, including the scene where Eno discusses generative art.

“We thought that was probably a good scene that everybody should see,” says Hustwit.
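To make the idea of fixed bookends and pinned scenes more concrete, here is a minimal Python sketch of how a constrained scene sequencer could work in principle. It is purely illustrative: Anamorph hasn't published how its patent-pending system works, and the function names (`generate_cut`, `count_orderings`), the scene IDs and the random-sampling approach are all assumptions, not the company's method.

```python
import math
import random


def generate_cut(pool, first, last, pinned, length, seed=None):
    """Assemble one hypothetical version of a film as an ordered list of scene IDs.

    Assumptions (illustrative only): `first` and `last` are fixed bookends,
    `pinned` maps a slot index to a scene that always appears in that slot,
    and every remaining slot is filled by sampling `pool` without repeats.
    """
    rng = random.Random(seed)  # a fixed seed reproduces the same version
    slots = [None] * length
    slots[0], slots[-1] = first, last

    # Place the pinned scenes; they may not overwrite the bookends.
    for index, scene in pinned.items():
        if not 0 < index < length - 1:
            raise ValueError(f"pinned slot {index} collides with a bookend")
        slots[index] = scene

    # Every scene already placed is excluded from the random draw.
    reserved = {first, last, *pinned.values()}
    candidates = [scene for scene in pool if scene not in reserved]
    open_slots = [i for i, scene in enumerate(slots) if scene is None]
    if len(candidates) < len(open_slots):
        raise ValueError("not enough scenes to fill the cut")

    # Fill the open slots with a random ordered sample of the remaining scenes.
    for i, scene in zip(open_slots, rng.sample(candidates, len(open_slots))):
        slots[i] = scene
    return slots


def count_orderings(pool_size, open_slots):
    """Number of ordered ways to fill `open_slots` from `pool_size` scenes: n!/(n-k)!."""
    return math.perm(pool_size, open_slots)


if __name__ == "__main__":
    pool = [f"scene_{n:03d}" for n in range(200)]
    cut = generate_cut(pool, first="opening", last="closing",
                       pinned={5: "generative_art_talk"}, length=12, seed=1)
    print(cut)
    print(count_orderings(len(pool), 9))
```

Because the open slots are filled from a large pool, the number of possible orderings grows multiplicatively with every extra slot, which is one way a system like this can reach billions of distinct versions while still guaranteeing that every audience sees the same opening, closing and pinned scenes.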

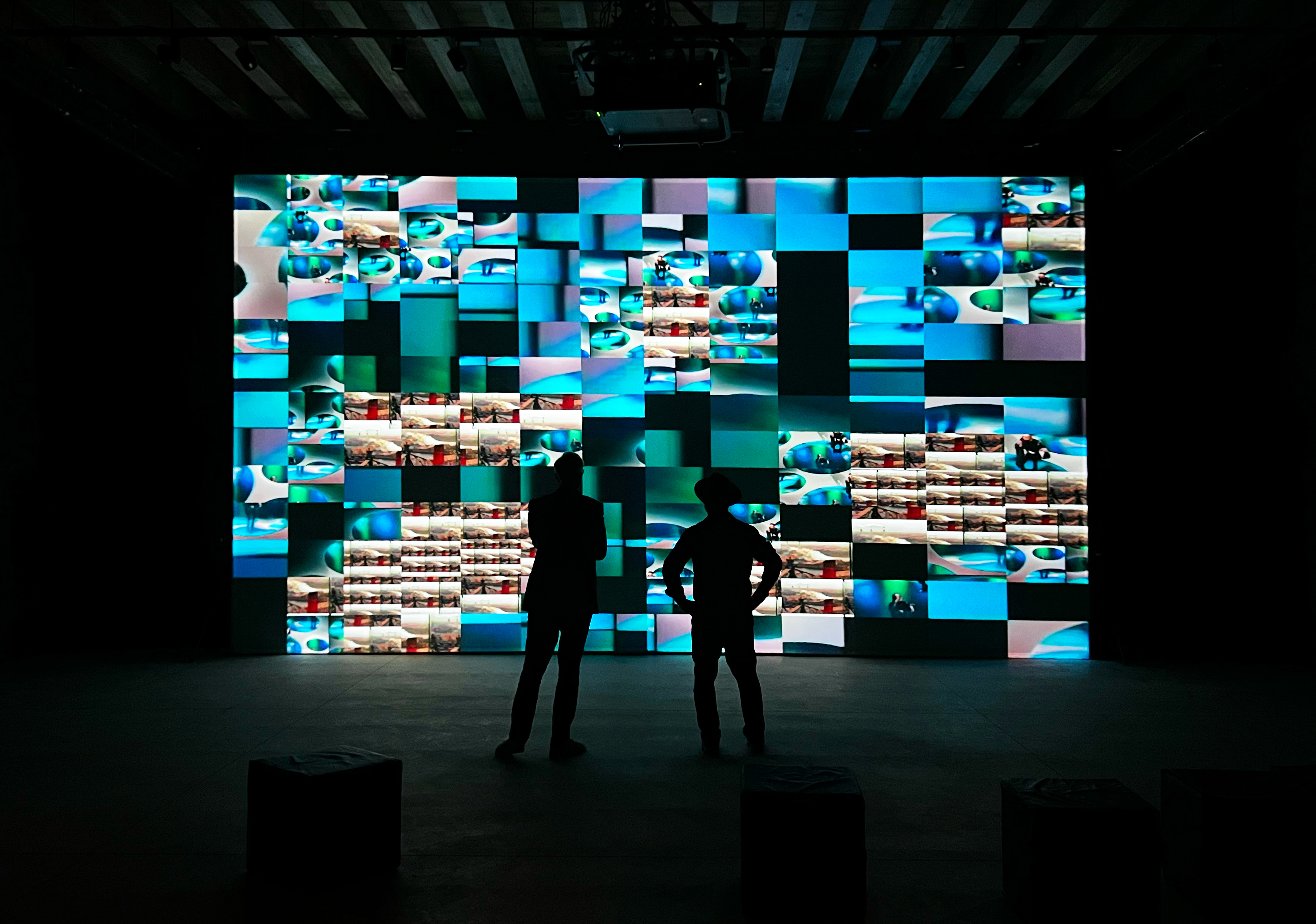

Anamorph used HD files at Sundance, but its software can also create the film live at a screening, which the startup showcased during an installation at the Venice Biennale in October 2023.

“We just let the generative platform run wild with Eno’s entire music catalog and all the footage and no rules. [The software] made a film that was 168 hours long and not a loop. It was generating an original film that didn’t repeat for 168 hours. It could have gone on longer, but the exhibition was only open for a week,” Hustwit shares.

Only six versions were shown at Sundance. Since then, the company has refined the software and added more footage, so “Eno” will continue to evolve.

Additional screenings will take place in 50 cities this spring and summer.

Image Credits: Gary Hustwit/Sundance Institute.

As you can imagine, a generative platform capable of producing so many variations from hundreds of hours of footage isn't built in a day, or even a year. Anamorph spent five years building its software from scratch, combining patent-pending techniques with the team's own storytelling expertise. The company says the software isn't trained on anyone else's data, IP or films.

“The main challenge was creating a system that could process potentially hundreds of 4K video files, each with its own 5.1 audio tracks, in real-time,” Dawes tells us. “The platform selects and sequences edited scene files, but it also builds its own pure generative scenes and transitions, creating video and original 5.1 audio elements dynamically. The platform also needed to be robust in a live situation; it wasn't an option to have it crash. So, we did a crazy amount of testing. We can create a unique version of a film live in a theater, or we can render out a ProRes file with its own 5.1 audio mix and make a DCP from that.”

Notably, Dawes says the system can make over 52 quintillion variations. (How insane is that?)

He also stresses, “This is a generative system, not generative AI. So I just need to make that clear, because pretty much everything that’s been said about [“Eno”] uses the word AI.”

The main obstacle to bringing Anamorph's system to the masses is that no existing streaming platform can support this type of tech. However, the company says it wants to develop those capabilities in-house for major streamers to use.

“I think the main constraint is that the current streaming networks aren’t equipped to dynamically generate unique video files and stream them to thousands of viewers so that each viewer is getting their own version of a movie. When we premiered ‘Eno’ at Sundance, all the big streaming companies loved it, but they also admitted that their systems can’t handle the tech involved… These streamers need to differentiate, and I think enabling the films and shows they’re releasing with generative technology is a way to do that,” says Hustwit.

It’ll likely take years before streaming services adapt to the technology. Until that happens, Anamorph is sticking to live events and theatrical releases.

“Something that the theater industry badly needs right now is a reason to get people to come in, and if there is a uniqueness about the live cinema experience, that’s one way that can be achieved,” Hustwit adds.

Image Credits: Anamorph.

In addition to documentaries, the company is exploring other projects that could use its generative platform, including art displays and even blockbuster films. Advertising agencies have also expressed interest, Hustwit reveals, with one company wanting to make 10,000 versions of a one-minute commercial.

It's hard to imagine a TV series with an episodic structure ever making sense in this format, especially one that incorporates B and C storylines. And unlike with Netflix's choose-your-own-adventure movie “Black Mirror: Bandersnatch,” viewers don't get to choose which scenes they watch, nor can they rewatch a particular version.

“It does require a little bit more active participation of the viewer to notice the differences if they rewatch it again, and get excited about discovering what wasn’t there,” Hustwit says.

All in all, this idea won't be for everyone, but it certainly offers a new and entertaining experience unlike anything audiences have seen before.

Now that Anamorph has officially launched, it's open to consultations with filmmakers, content creators, studios, streaming companies and more. Rather than make its tools publicly accessible, the company wants to collaborate on projects so it can “consider the source material and the overall story goals,” says Hustwit. He adds that Anamorph is currently in discussions with a dozen or more companies.

The cost of each project will vary depending on its scope.

“We could make a Marvel movie that changes every time it plays — which would be amazing — and the costs of that would be more than a small video art project. But we’re interested in collaborating on projects in both those ranges. Our main goal is to get the idea out about this new kind of cinema and hook up with great collaborators to help explore this idea,” Hustwit says.