tl;dr

Jeremy Howard (founding CEO, previously co-founder of Kaggle and fast.ai) and Eric Ries (founding director, previously creator of Lean Startup and the Long-Term Stock Exchange) today launched Answer.AI, a new kind of AI R&D lab which creates practical end-user products based on foundational research breakthroughs. The creation of Answer.AI is supported by an investment of USD10m from Decibel VC. Answer.AI will be a fully-remote team of deep-tech generalists—the world’s very best, regardless of where they live, what school they went to, or any other meaningless surface feature.

A new R&D lab

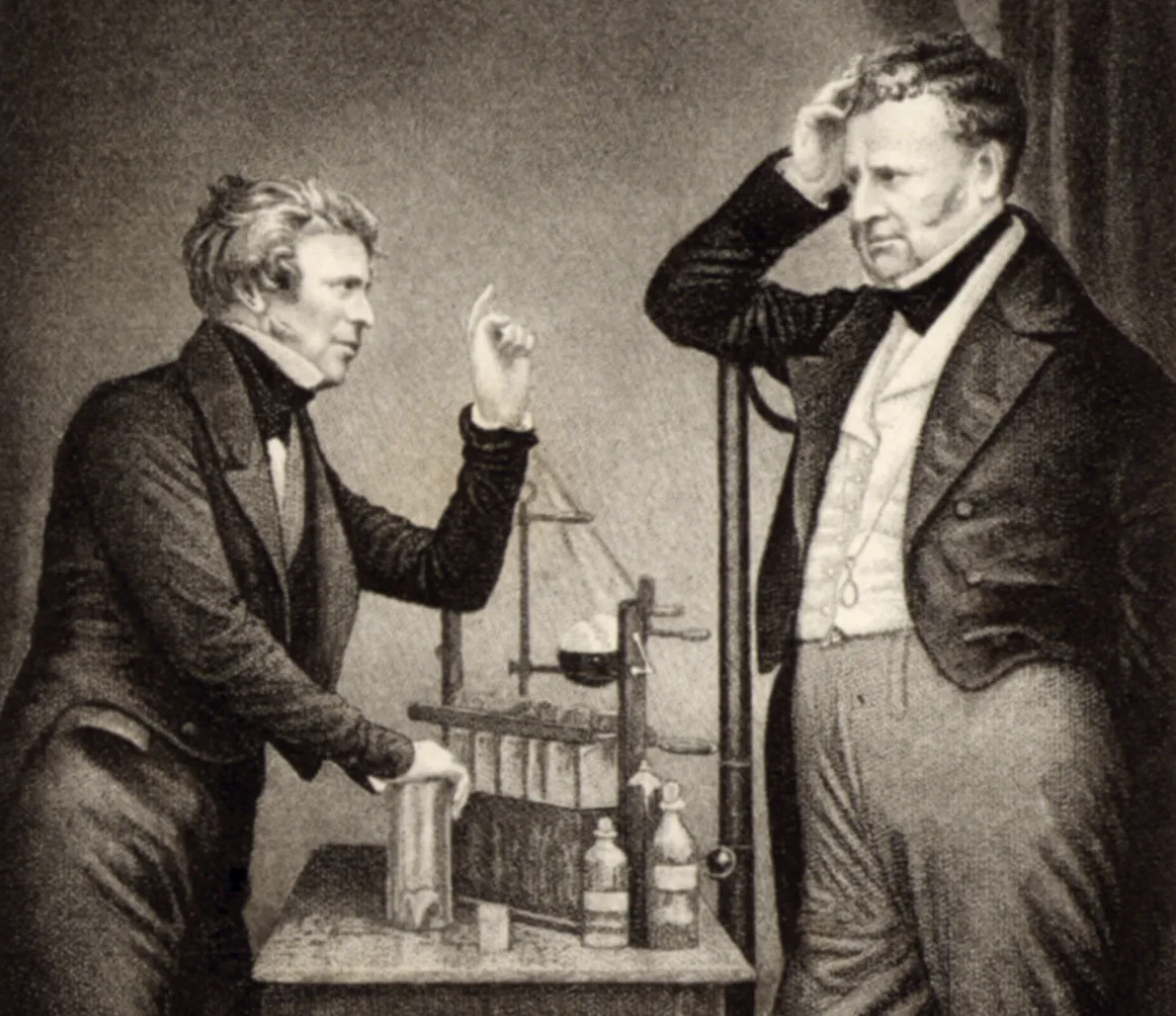

In 1831 Michael Faraday showed the world how to harness electricity. Suddenly there was, quite literally, a new source of power in the world. He later found the basis of the unification of light and magnetism, and knew he was onto something big:

“I happen to have discovered a direct relation between magnetism and light, also electricity and light, and the field it opens is so large and I think rich.” Michael Faraday; letter to Christian Schoenbein

But it wasn’t quite clear how to harness this power. What kinds of products and services could now be created that couldn’t before? What could now be made far cheaper, more efficient, and more accessible? One man set out to understand this, and in 1876 he put together a new kind of R&D lab, which he called the “Invention Lab”: a lab that would figure out the fundamental research needed to tame electricity, and the applied development needed to make it useful in practice.

You might have heard of the man: his name was Thomas Edison. And the organization he created turned into a company you would know: General Electric.

Today, we find ourselves in a similar situation. There’s a new source of power in the world—artificial intelligence. And, like before, it’s not quite clear how to harness this power. Where are all the AI-powered products and services that make our lives and work dramatically easier and more pleasant?

To create these AI-powered products and services, we’ve created a new R&D lab, called Answer.AI. Answer.AI will figure out the fundamental research needed to tame AI, and the development path needed to make it useful in practice.

An iterative path to harnessing AI

Harnessing AI requires not just low-level computer science and mathematical research, but also deep thinking about what practical applications can take advantage of this new power. The “D” in “R&D” is critical: it’s only by considering the development of practical applications that the correct research directions can be targeted.

That’s why Answer.AI is built on the work of experts in both research and development. Co-founders Jeremy Howard (that’s me!) and Eric Ries have created pioneering ideas in each of these areas. I co-founded fast.ai, where I have worked for the last 7 years on research into how best to make AI more accessible, particularly through transfer learning and fine-tuning. I’ve been working with machine learning for over 30 years, including creating the ULMFiT method of fine-tuning large language models, which is used as the basis of all popular language models today, including OpenAI’s ChatGPT and Google’s Gemini. I have developed the longest-running online courses on deep learning in the world, in which I show students how to start with simple models and then iteratively improve them all the way to the state of the art.

I’ve known Eric for years, and there’s no-one I trust or respect more, which is why I asked him to serve as the founding director of Answer.AI. Eric has dedicated the last 10 years of his life to improving how companies operate, serve customers, and are governed. He is the creator of the Lean Startup movement, which is the basis of how most startups build products and scale their organizations. His work focuses on development: how organizations can go from an idea to a sustainable, mission-driven, and profitable product in practice. One of his key insights was to create and then iteratively improve a Minimum Viable Product (MVP).

I asked Eric for his thoughts on Answer.AI’s unique approach to R&D, and he summarised it better than I ever could, so I’ll just quote his reply here directly:

“People think that the order is research→development, and that therefore an R&D lab does ‘R’ and then ‘D’. That is, the research informs the development, and so being practical means having researchers and developers. But this is wrong, and leads to a lot of bad research, because development should inform research and vice-versa. So having development goals is a way to do more effective research, if you set that out as your north star.”

Eric is also an expert on governance and how companies should be led in order to align profit and increased human flourishing. He created the Long-Term Stock Exchange (LTSE), the first fundamentally new US stock exchange in over 50 years. LTSE mandates that listed companies and like-minded investors work towards long-term value, rather than just short-term profit maximization. Eric serves as the Chairman of LTSE, meaning he is not only up to date on the right long-term governance frameworks, but also on the cutting edge of inventing new systems.

It will take years for Answer.AI to harness AI’s full potential, which requires the kind of strategic foresight and long-term tenacity that is hard to maintain in today’s business environment. Eric has been writing a book on exactly this topic, and his view is that the key foundation is to have the right corporate governance in place. He’s helped me ensure that Answer.AI will always reflect my vision and strategy for harnessing AI. We’re doing this by setting up a for-profit organization that focuses on long-term impact. After all, over a long-enough timeframe, maximizing shareholder value and maximizing societal benefits are entirely aligned.

Whilst Eric and I bring very different (and complementary) skills and experiences to the table, we bring the same basic idea of how to solve really hard problems: solve smaller, easier problems in simple ways first, and create a ladder where each rung is a useful step in itself, whilst also getting a little closer to the end goal.

Our research platform

Companies like OpenAI and Anthropic have been working on developing Artificial General Intelligence (AGI). And they’ve done an astonishing job of that — we’re now at the point where experts in the field are claiming that “Artificial General Intelligence Is Already Here”.

At Answer.AI we are not working on building AGI. Instead, our interest is in effectively using the models that already exist. Figuring out what practically useful applications can be built on top of these foundation models is a huge undertaking, and I believe it is receiving insufficient attention.

My view is that the right way to build Answer.AI’s R&D capabilities is by bringing together a very small number of curious, enthusiastic, technically brilliant generalists. Having huge teams of specialists creates an enormous amount of organizational friction and complexity. But with the help of modern AI tools I’ve seen that it’s possible for a single generalist with a strong understanding of the foundations to create effective solutions to challenging problems, using unfamiliar languages, tools, and libraries (indeed, I’ve done this myself many times!). I think people will be very surprised to discover what a small team of nimble, creative, open-minded people can accomplish.

At Answer.AI we will be doing genuinely original research into questions such as how best to fine-tune smaller models to make them as practical as possible, and how to reduce the constraints that currently hold people back from using AI more widely. We’re interested in solving things that may be too small for the big labs to care about, but our view is that it’s the collection of these small things that matters a great deal in practice.

This informs how we think about safety. Whilst AI is becoming more and more capable, the dangers to society from poor algorithmic decision making have been with us for years. We believe in learning from these years of experience, and thinking deeply about how to align the applications of models with the needs of people today. At fast.ai three years ago we created a pioneering course on Practical Data Ethics, as well as dedicating a chapter of our book to these issues. We are committed to continuing to work towards ethical and beneficial applications of AI.

From fast.ai to Answer.AI

Rachel Thomas and I realised over seven years ago that deep learning and neural networks were on their way to becoming one of the most important technologies in history, but they were also on their way to being controlled and understood by a tiny, exclusive sliver of society. We were worried about centralization and control of something so critical, so we founded fast.ai with the mission of making AI more accessible.

We succeeded beyond our wildest dreams, and today fast.ai’s AI courses are the longest-running, and perhaps most loved, in the world. We built the first library to make PyTorch easier to use and more powerful (fastai), built the fastest image model training system in the world (according to the DAWNBench competition), and created the 3-step training methodology now used by all major LLMs (ULMFiT). Everything we have created over the last 7 years has been free: fast.ai was an entirely altruistic endeavour in which everything we built was gifted to everybody.

I’m now of the opinion that this is the time for rejuvenation and renewal of our mission. Indeed, the mission of Answer.AI is the same as fast.ai: to make AI more accessible. But the method is different. Answer.AI’s method will be to use AI to create all kinds of products and services that are really valuable and useful in practice. We want to research new ways of building AI products that serve customers that can’t be served by current approaches.

This will allow us to make money, which we can use both to expand into more and bigger opportunities and to drive down costs through better efficiency, creating a positive feedback loop of more and more value from AI. We’ll be spending all our time looking at how to make the market bigger, rather than how to increase our share of it. There’s no moat, and we don’t even care! This goes to the heart of our key premise: creating a long-term profitable company, and making a positive impact on society overall, can be entirely aligned goals.

We don’t really know what we’re doing

If you’ve read this far, then I’ll tell you the honest truth: we don’t actually know what we’re doing. Artificial intelligence is a vast and complex topic, and I’m very skeptical of anyone who claims they’ve got it all figured out. Indeed, Faraday felt the same way about electricity; he wasn’t even sure it was going to be of any import:

“I am busy just now again on Electro-Magnetism and think I have got hold of a good thing but can’t say; it may be a weed instead of a fish that after all my labour I may at last pull up.” Michael Faraday; 1831 letter to R. Phillips

But it’s OK to be uncertain. Eric and I believe that the best way to develop valuable stuff built on top of modern AI models is to try lots of things, see what works out, and then gradually improve bit by bit from there.

As Faraday said, “A man who is certain he is right is almost sure to be wrong.” Answer.AI is an R&D lab for people who aren’t certain they’re right, but who’ll work damn hard to get it right eventually.

This isn’t really a new kind of R&D lab. Edison did it before, nearly 150 years ago. So I guess the best we can do is to say it’s a new old kind of R&D lab. And if we do as well as GE, then I guess that’ll be pretty good.