Legacy systems weren’t built for AI, making integration a nightmare—missing APIs, outdated infrastructure, and strict security rules slow everything down. Inferable fixes this by letting developers connect AI agents to their existing code with minimal effort. Co-founder Nadeesha Cabral explains how his experience with enterprise systems led to Inferable and why AI-powered automation needs to be built for the real world.

1. What inspired you to create Inferable, and how did your previous experiences shape the platform’s development?

Nadeesha Cabral: We’ve both worked inside many internal legacy systems and kept running into the same integration complexity. These systems don’t expose the APIs you need, they’re poorly maintained, and they’re deployed in private subnets where outbound connections are not allowed. Getting them integrated into the stack, even to do something as simple as calling an API and getting some data out of it, is a pain. Our insight was to see whether LLMs could solve part of this problem (namely, discovering those internal APIs, mapping problems to solutions, and orchestration), while good engineering solves the other part.

2. How does Inferable simplify building agentic applications compared to traditional frameworks?

Nadeesha Cabral: TL;DR – Developers bring the tools, and we provide a reliable agent runtime.

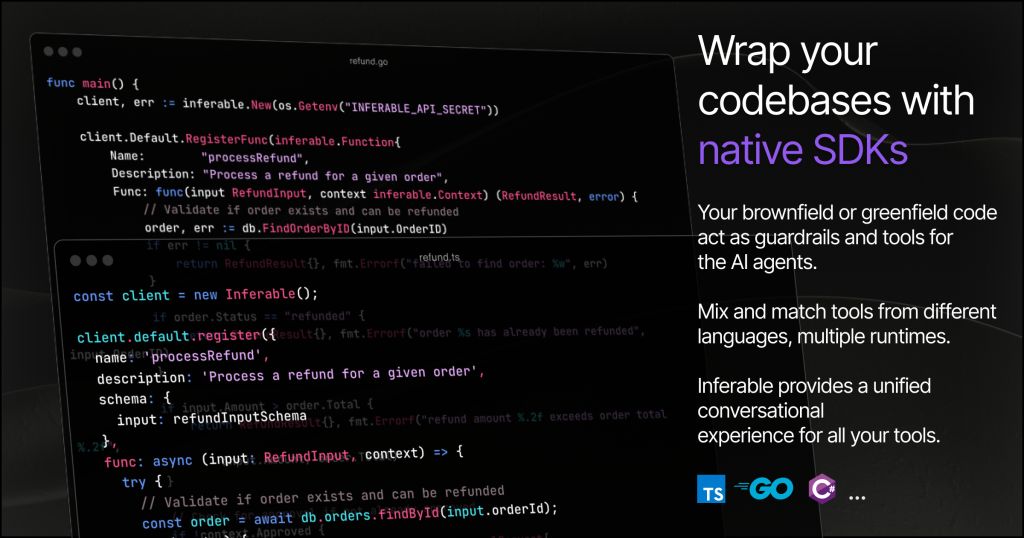

We have a set of SDKs (Node.js, Go and .NET, with Java and PHP coming soon) that use existing types in a codebase to generate tools dynamically at runtime. We then attach these tools to a pre-built ReAct (Reason + Act) agent.

So, with a couple of lines of code, using our SDKs, your existing codebase becomes “AI-orchestrable”.
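Inferable’s SDKs target Node.js, Go and .NET, and the interview doesn’t show their API. As a language-neutral illustration of the general idea of turning existing types into tool definitions, here is a minimal Python sketch that uses only the standard library’s `inspect` and `typing` modules. The function names (`tool_from_function`, `get_order_status`), the type mapping, and the JSON-Schema-style output shape are all assumptions made for this example, not Inferable’s format.

```python
import inspect
import json
import typing
from typing import Any, Callable

# Hypothetical mapping from Python annotations to JSON Schema type names.
JSON_TYPES: dict[Any, str] = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_from_function(fn: Callable[..., Any]) -> dict[str, Any]:
    """Describe an existing function as a JSON-Schema-style tool definition."""
    hints = typing.get_type_hints(fn)
    properties: dict[str, dict[str, str]] = {}
    required: list[str] = []
    for name, param in inspect.signature(fn).parameters.items():
        # Unannotated or unknown types fall back to "string" in this sketch.
        properties[name] = {"type": JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means the model must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


# A hypothetical function that already exists in a codebase.
def get_order_status(order_id: str, include_history: bool = False) -> dict:
    """Look up the status of an order by its ID."""
    return {"order_id": order_id, "status": "shipped"}


if __name__ == "__main__":
    print(json.dumps(tool_from_function(get_order_status), indent=2))
```

The function itself is untouched; only its signature and docstring are read, which is what lets existing code become a tool without being rewritten.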

To do this, we’ve broken the problem down into three parts: Tools, Agents and Workflows.

Tools are the parts of your internal systems that you want the AI to access. These could be functions, API resolvers, or your ORM layer. Agents are probabilistic reasoning engines that use situational context and the available tools to arrive at an answer to the prompt. We support structured prompts by breaking them down into facts, goals and data. The final piece is durable workflows. Workflows let agents pass data to one another, and let developers pipe the output of one agent into another agent or into other side effects such as API calls, database operations, or other workflows.

With these three things, we aim to provide a good DX with sensible defaults to someone building LLM-based automation.

3. What differentiates Inferable from other agent frameworks and AI platforms in the market?

Nadeesha Cabral: We’re intensely focused on internal systems and internal use cases for companies that require a higher degree of data privacy. We integrate the agent runtime and tool orchestration vertically, which makes the two highly compatible and more reliable than rolling your own.

We’re also very focused on distributed tools and workflows. Most companies don’t run a single copy of their software. Most modern software is run with multiple replicas separated by network boundaries. In these environments, service discovery is a challenge when you have dozens of tools and need to search through those tools to select the appropriate one. Even if you can find the service, routing the call to the correct tool, load balancing across many replicas, and recovering from tool failures (network failures, machines rolling, pods replacing) is another challenge. Inferable handles all of these concerns for you via our long-polling SDKs.

We’ve also gone to great lengths to enable durable execution for agentic applications in a way that preserves the developer experience of writing “workflow as code.” Basically, you can string together multiple agents and define a long-running process (something that might run anywhere from a few seconds to weeks or months) that can pause and resume (for example, when a human has to give approval), and execute it with certain reliability guarantees.
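Durable execution is often implemented by checkpointing each step’s result and replaying the workflow from the top after a pause or failure, skipping steps that already completed. The interview doesn’t say how Inferable does it, so the following is a minimal sketch of that replay technique under stated assumptions: a plain dict stands in for durable storage, and `DurableWorkflow`, `wait_for_approval` and `refund_workflow` are hypothetical names.

```python
from typing import Any, Callable


class AwaitingApproval(Exception):
    """Raised to suspend a workflow until a human approves a step."""


class DurableWorkflow:
    """Replay-based sketch: each completed step's result is checkpointed, so
    re-running the workflow after a pause or crash skips finished steps."""

    def __init__(self, store: dict[str, Any]) -> None:
        self.store = store  # stand-in for a durable database

    def step(self, key: str, fn: Callable[[], Any]) -> Any:
        if key in self.store:
            return self.store[key]  # finished in an earlier run: reuse the result
        result = fn()
        self.store[key] = result  # checkpoint before moving on
        return result

    def wait_for_approval(self, key: str) -> None:
        if not self.store.get(f"approved:{key}"):
            raise AwaitingApproval(key)  # pause; resume by re-running later


def refund_workflow(wf: DurableWorkflow, log: list[str]) -> str:
    """Hypothetical two-step workflow with a human approval in between."""

    def lookup() -> dict[str, Any]:
        log.append("lookup")
        return {"id": "A1", "amount": 40}

    def refund() -> str:
        log.append("refund")
        return f"refunded {order['amount']}"

    order = wf.step("lookup", lookup)
    wf.wait_for_approval("refund")
    return wf.step("refund", refund)


if __name__ == "__main__":
    store: dict[str, Any] = {}
    log: list[str] = []
    try:
        refund_workflow(DurableWorkflow(store), log)
    except AwaitingApproval as pending:
        print("paused, waiting for approval of:", pending)
    store["approved:refund"] = True  # a human approves, possibly days later
    print(refund_workflow(DurableWorkflow(store), log))  # refunded 40
    print(log)  # ['lookup', 'refund']: lookup was not re-executed
```

Because the lookup result is checkpointed, resuming after approval does not repeat it. A production engine would also need persistent storage and stable, deterministic step keys.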

4. Inferable supports multiple programming languages. What motivated the focus on Node.js, Golang, and .NET?

Nadeesha Cabral: We’re interested in providing SDKs for languages that are currently underserved by AI tooling. Everyone is building for Python and TypeScript, but we want to target the long tail. Since we’ve decoupled the execution environment (where things execute) from the orchestration environment (the control plane), our APIs do the heavy lifting, which lets us ship a good-quality SDK very quickly.

5. How does Inferable ensure data security and compliance, especially for enterprise users?

Nadeesha Cabral: All the tools execute in the user’s own runtime. The agent cannot do anything your codebase doesn’t allow it to do. For example, it has no access to your execution runtime or to any data your functions don’t expose.

Workflows, agents and tools all talk to the control plane via long-polling. This means that to use us in an internal network, a developer doesn’t need to expose internal APIs, configure load balancers, proxies, etc.
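The networking benefit of long polling is that every connection starts from the worker, and multiple replicas polling the same queue share the work between them. The sketch below simulates this in a single process, assuming an in-memory `queue.Queue` in place of a real control plane reached over HTTP; `ControlPlane`, `poll` and `run_worker` are hypothetical names, not Inferable’s SDK API.

```python
import queue
import threading
from typing import Any, Callable, Optional


class ControlPlane:
    """In-memory stand-in for a hosted control plane. Workers only ever make
    outbound poll() calls, so nothing has to connect in to them."""

    def __init__(self) -> None:
        self.jobs: "queue.Queue[tuple[str, dict]]" = queue.Queue()
        self.results: dict[str, Any] = {}
        self._lock = threading.Lock()

    def enqueue(self, job_id: str, payload: dict) -> None:
        self.jobs.put((job_id, payload))

    def poll(self, timeout: float) -> Optional[tuple[str, dict]]:
        # Long poll: hold the request until a job arrives or the timeout expires.
        try:
            return self.jobs.get(timeout=timeout)
        except queue.Empty:
            return None

    def complete(self, job_id: str, result: Any) -> None:
        with self._lock:
            self.results[job_id] = result


def run_worker(cp: ControlPlane, name: str, handler: Callable[[dict], Any],
               stop: threading.Event) -> None:
    """One replica of a service: competes with other replicas for jobs."""
    while not stop.is_set():
        job = cp.poll(timeout=0.1)
        if job is None:
            continue  # nothing to do yet; poll again
        job_id, payload = job
        try:
            cp.complete(job_id, {"worker": name, "value": handler(payload)})
        finally:
            cp.jobs.task_done()


if __name__ == "__main__":
    cp = ControlPlane()
    stop = threading.Event()
    replicas = [
        threading.Thread(target=run_worker, args=(cp, f"replica-{i}", lambda p: p["x"] * 2, stop))
        for i in range(2)
    ]
    for t in replicas:
        t.start()
    for i in range(10):
        cp.enqueue(f"job-{i}", {"x": i})
    cp.jobs.join()  # wait until every job has been handled by some replica
    stop.set()
    for t in replicas:
        t.join()
    print(sorted((job, r["worker"]) for job, r in cp.results.items()))
```

Which replica handles which job depends on thread scheduling; the point is that no replica needs an inbound port, load balancer or proxy.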

6. Can you elaborate on the managed agent runtime’s capabilities, especially in solving complex problems step-by-step?

Nadeesha Cabral: The agent that Inferable exposes in a workflow comes in two flavours: one that does multi-step problem solving and one that does single-step problem solving. Both have their own advantages. The multi-step problem-solving agent (what we call the Reasoning and Action agent) uses an iterative algorithm to turn any non-reasoning model into a reasoning-esque model (like OpenAI o1 or DeepSeek R1). To achieve this, we make the model “think out loud” and use that output as input for the next model iteration. We have some guardrails in place to make sure the model doesn’t end up in infinite loops or recursive reasoning loops.
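The interview doesn’t detail these guardrails, so here is a generic sketch of a bounded ReAct-style loop, assuming two illustrative guardrails: a hard iteration cap and a check for repeated identical tool calls. The model is a scripted stub (`scripted_model`), and `run_react` and `GuardrailTripped` are hypothetical names.

```python
from typing import Any, Callable

Step = dict[str, Any]


class GuardrailTripped(Exception):
    """Raised when the loop is stopped to avoid runaway reasoning."""


def run_react(model: Callable[[list[Step]], Step], tools: dict[str, Callable[[Any], Any]],
              goal: str, max_iterations: int = 8) -> Any:
    transcript: list[Step] = [{"goal": goal}]
    seen: set[tuple[str, str]] = set()
    for _ in range(max_iterations):  # guardrail 1: hard cap on iterations
        step = model(transcript)  # the model "thinks out loud" and picks an action
        if "final" in step:
            return step["final"]
        call = (step["action"], repr(step["input"]))
        if call in seen:  # guardrail 2: an identical tool call repeated suggests a loop
            raise GuardrailTripped(f"repeated call {call}")
        seen.add(call)
        observation = tools[step["action"]](step["input"])
        # The thought and the tool result become input for the next iteration.
        transcript.append({"thought": step["thought"], "action": step["action"],
                           "observation": observation})
    raise GuardrailTripped(f"no answer after {max_iterations} iterations")


def scripted_model(transcript: list[Step]) -> Step:
    """Hypothetical stand-in for an LLM call, scripted for the example."""
    if len(transcript) == 1:
        return {"thought": "I need the order status first.", "action": "get_status", "input": "A1"}
    return {"final": f"Order A1 is {transcript[-1]['observation']}."}


if __name__ == "__main__":
    tools = {"get_status": lambda order_id: "shipped"}
    print(run_react(scripted_model, tools, "Where is order A1?"))  # Order A1 is shipped.
```

A stub that keeps requesting `get_status("A1")` would trip the second guardrail on its second iteration instead of looping forever.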

This and all of our agent runtime capabilities are “managed” in the sense that there’s a control plane instance that keeps track of them, retries failed operations, tracks stalled machines, and provides outputs for telemetry. It also takes care of concerns like model routing, where failed LLM calls are load-balanced and replayed.
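Model routing where failed calls are “load-balanced and replayed” can take several forms. One simple version, sketched below under the assumption that each provider is just a callable taking a prompt, retries a failed call with exponential backoff and then fails over to the next provider in priority order. `call_with_routing` and both providers are hypothetical; a production router might also spread traffic across providers rather than always preferring the first.

```python
import time
from typing import Callable, Sequence


class AllModelsFailed(Exception):
    """Raised when every provider has exhausted its retries."""


def call_with_routing(providers: Sequence[tuple[str, Callable[[str], str]]], prompt: str,
                      attempts_per_provider: int = 2, base_delay: float = 0.5) -> tuple[str, str]:
    """Try each provider in priority order, replaying failed calls with
    exponential backoff before failing over to the next one."""
    errors: list[str] = []
    for name, call in providers:
        for attempt in range(attempts_per_provider):
            try:
                return name, call(prompt)
            except Exception as exc:  # e.g. rate limit, timeout, 5xx from the API
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                time.sleep(base_delay * 2 ** attempt)
    raise AllModelsFailed("; ".join(errors))


def flaky_primary(prompt: str) -> str:
    raise TimeoutError("upstream timeout")  # hypothetical provider that is down


def backup(prompt: str) -> str:
    return f"answer to: {prompt}"  # hypothetical healthy provider


if __name__ == "__main__":
    print(call_with_routing([("primary", flaky_primary), ("backup", backup)], "hi", base_delay=0.01))
    # ('backup', 'answer to: hi')
```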

7. What are some of the most impactful use cases of Inferable in production environments?

Nadeesha Cabral: We’re seeing strong adoption in several areas:

Complex Multi-Step Workflows that require a lot of reading and writing to internal systems – this is our bread and butter.

Dev teams depend on these applications to debug issues in production, and operations teams depend on them to triage issues quickly according to SOPs and provide automated resolutions. These workflows are potentially long-running, need a high degree of reliability, and need to work with the existing system architecture without putting too much pressure on developers to learn yet another framework.

Since our managed cloud pricing is usage-based, and we compete on DX, engineering teams can replicate many of the features of a vertical agent (customer support, data analysis) at a fraction of the cost, while owning the code and customizing it themselves. So we’re also seeing an uptick in companies considering us for building proprietary vertical agents.

8. How does Inferable’s observability feature empower developers and enterprises to monitor their workflows effectively?

Nadeesha Cabral: Since all of the execution happens within the user’s own compute, most observability requirements are covered by the user’s existing observability setup. All workflows and tools can push logs and trace spans using any third-party library present in the user’s codebase.

For monitoring the control plane, we offer a comprehensive event-based append-only log accessible through the App UI or APIs.

9. What have been the biggest challenges in scaling Inferable, and how did you overcome them?

Nadeesha Cabral: Being bootstrapped, we faced several key challenges. On the technical side, we’ve stayed intensely focused on getting the technology right while maintaining capital efficiency. This meant engineering our solutions to run effectively on commodity hardware and building scalable infrastructure without excessive cloud costs.

The market itself presents another challenge—it’s still waking up to agents’ potential. We find ourselves not just selling a product but educating the market about the possibilities.

Perhaps most challengingly, we’ve had to work around the limitations of current-generation LLMs, which struggle with complex reasoning in large contexts. This led us to develop engineering solutions and scaffolding to make their behaviour more deterministic and reliable.

10. What personal lessons have you learned about driving innovation in the AI and agent orchestration space?

Nadeesha Cabral: The key lesson has been that hype doesn’t replace good engineering. After all the excitement and buzz settle, the fundamental challenge in AI orchestration comes down to engineering the right abstraction.

Nadeesha Cabral, Co-founder of Inferable.

11. What does success look like for you and Inferable?

Nadeesha Cabral: Our vision of success is to become the platform that delivers the best developer experience for building AI agents with existing codebases—regardless of programming language. We want to be the go-to solution that makes it simple and reliable to add intelligent automation to any system.

12. Looking back, is there a decision or moment in your journey as a founder that you regret or wish you had handled differently?

Nadeesha Cabral: We definitely should have open-sourced much earlier. Open-sourcing earlier would have allowed us to capitalize on community engagement from the start. We’ve come to realize that no one has any real lasting moat in AI or software, for that matter. If someone can build on what we do and surpass us in execution, the world deserves to benefit from that.

We also initially overestimated the pace of model development and the scaling advantages that models could achieve. In particular, we expected reasoning-capable models to get better and more cost-effective faster than they eventually did. It was a gamble that didn’t pay off. We’ve learned it’s somewhat dangerous to tie ourselves to one model, and our longer-term thinking is that we’d improve upon open-source models.

We’ve been feeling more positive about this direction since the release of DeepSeek R1.

Editor’s Note

Nadeesha Cabral knows firsthand that AI adoption isn’t just about technology—it’s about making it work within real constraints. Inferable isn’t just another agent framework; it’s a practical, developer-first solution designed to integrate AI into complex systems without breaking what already works.