A study predicts that bad actors will use AI daily by mid-2024 to spread toxic content into mainstream online communities, potentially impacting elections. Credit: SciTechDaily.com

A study forecasts that bad actors will increasingly use AI in their daily activities by mid-2024. The research, conducted by Neil F. Johnson and his team, maps online communities associated with hate. The team located these communities by searching for terms listed in the Anti-Defamation League Hate Symbols Database and by identifying groups flagged by the Southern Poverty Law Center.

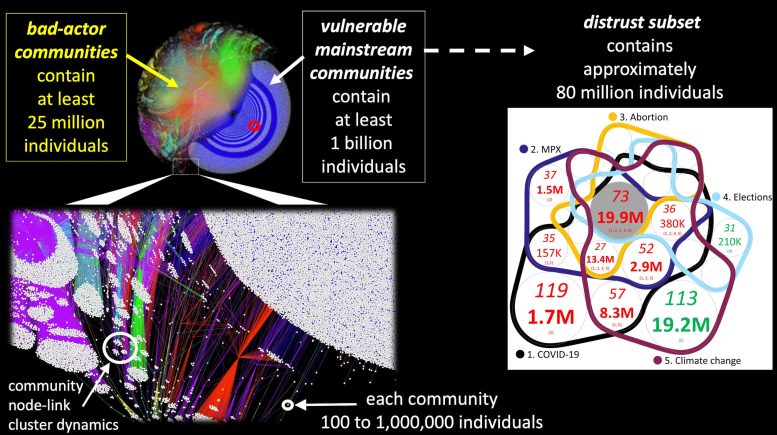

Starting from an initial list of “bad-actor” communities found using these terms, the authors assess the communities that those bad-actor communities link to. They repeat this procedure to generate a network map of bad-actor communities and the more mainstream online groups they link to.
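The link-following procedure described above can be pictured as a snowball expansion over a link graph. The Python sketch below is only an illustration under stated assumptions, not the authors' actual pipeline: the names `build_network_map`, `get_outlinks`, `is_bad_actor`, and `max_rounds` are hypothetical, and the rule that only bad-actor communities are expanded further is an assumption drawn from the summary.

```python
def build_network_map(seed_communities, get_outlinks, is_bad_actor, max_rounds=3):
    """Snowball outward from seed bad-actor communities.

    seed_communities: initial bad-actor communities (hypothetical input).
    get_outlinks:     callable returning the communities a community links to
                      (hypothetical; stands in for real platform data).
    is_bad_actor:     callable deciding whether a linked community is itself
                      a bad-actor community (hypothetical classifier).
    Returns (bad_actors, mainstream, edges).
    """
    bad_actors = set(seed_communities)
    mainstream = set()   # communities directly linked to by a bad-actor community
    edges = set()        # directed links (source, target)
    frontier = set(seed_communities)

    for _ in range(max_rounds):
        next_frontier = set()
        for source in frontier:
            for target in get_outlinks(source):
                edges.add((source, target))
                if target in bad_actors or target in mainstream:
                    continue  # already placed on the map
                if is_bad_actor(target):
                    bad_actors.add(target)
                    next_frontier.add(target)  # assumption: only these are expanded
                else:
                    mainstream.add(target)
        if not next_frontier:
            break  # no new bad-actor communities were found
        frontier = next_frontier

    return bad_actors, mainstream, edges
```

In this sketch, mainstream communities are recorded but never expanded, so the resulting map contains only bad-actor communities and the mainstream groups they link to directly.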

Mainstream Communities Categorized as “Distrust Subset”

Some mainstream communities are categorized as belonging to a “distrust subset” if they host significant discussion of COVID-19, MPX, abortion, elections, or climate change. Using the resulting map of the current online bad-actor “battlefield,” which includes more than 1 billion individuals, the authors project how AI may be used by these bad actors.

The bad-actor–vulnerable-mainstream ecosystem (left panel). It comprises interlinked bad-actor communities (colored nodes) and vulnerable mainstream communities (white nodes, which are communities to which bad-actor communities have formed a direct link). This empirical network is shown using the ForceAtlas2 layout algorithm, which is spontaneous, hence sets of communities (nodes) appear closer together when they share more links. Different colors correspond to different platforms. The small red ring shows the 2023 Texas shooter’s YouTube community as an illustration. The right panel shows a Venn diagram of the topics discussed within the distrust subset. Each circle denotes a category of communities that discuss a specific set of topics, listed at the bottom. The medium-sized number is the number of communities discussing that specific set of topics, and the largest number is the corresponding number of individuals; e.g., the gray circle shows that 19.9M individuals (73 communities) discuss all 5 topics. A number is red if a majority are anti-vaccination and green if a majority are neutral on vaccines. Only regions with > 3% of total communities are labeled. Anti-vaccination dominates. Overall, this figure shows how bad-actor-AI could quickly achieve global reach and could also grow rapidly by drawing in communities with existing distrust. Credit: Johnson et al.

The authors predict that bad actors will increasingly use AI to push toxic content continuously onto mainstream communities. They expect bad actors to rely on early iterations of AI tools, because these programs have fewer filters designed to prevent misuse, are freely available, and are small enough to fit on a laptop.

AI-Powered Attacks Almost Daily by Mid-2024

The authors predict that such bad-actor-AI attacks will occur almost daily by mid-2024—in time to affect U.S. and other global elections. The authors emphasize that as AI is still new, their predictions are necessarily speculative, but hope that their work will nevertheless serve as a starting point for policy discussions about managing the threats of bad-actor-AI.

Reference: “Controlling bad-actor-artificial intelligence activity at scale across online battlefields” by Neil F Johnson, Richard Sear and Lucia Illari, 23 January 2024, PNAS Nexus.

DOI: 10.1093/pnasnexus/pgae004