I have already published articles about LangChain, introducing the library and all its capabilities. Now I want to focus on a key aspect: how to manage memory in intelligent chatbots.

Chatbots and agents also need an information storage mechanism, which can take different forms and perform different functions. Implementing a memory system in a chatbot not only makes it smarter, but also more natural and useful for users.

Fortunately, LangChain provides APIs that make it easy for developers to implement memory in their applications. In this article, we will explore this aspect in more detail.

Use Memory in LangChain

A best practice when developing chatbots is to save all the interactions the chatbot has with the user. This is because the answer of the LLM depends on the past conversation. In fact, the LLM may answer the same question from two users differently, because each user has a different past conversation with the chatbot and the chatbot is therefore in a different state.

So what the chatbot memory creates is nothing more than a list of past messages, which are fed back to the LLM before a new question is asked. Of course, LLMs have a limited context, so you have to be a little creative and choose how to feed this history back to the LLM. The most common methods are to return a summary of the past messages, or to return only the N most recent messages, which are probably the most informative.
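As a minimal sketch of the second strategy, assume the messages are stored as plain (role, content) tuples. The helper `last_n_messages` and the tuple format are hypothetical names chosen for this illustration; they are not part of LangChain.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, content); a hypothetical format for this sketch


def last_n_messages(history: List[Message], n: int) -> List[Message]:
    """Return the n most recent messages, preserving their order."""
    if n <= 0:
        return []
    return history[-n:]


history = [
    ("human", "Hi!"),
    ("ai", "Hello, how can I help you today?"),
    ("human", "What is LangChain?"),
    ("ai", "A library for building LLM applications."),
]

# Keep only the last two messages as context for the next question.
context = last_n_messages(history, 2)
print(context)
```

A window like this is cheap and predictable, but it forgets everything older than N messages; a summary keeps older information at the cost of an extra LLM call.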

Start with the basics with ChatMessageHistory

This is the main class that allows us to manage the messages exchanged between the chatbot (AI) and the user (Human). This class provides two main methods, which are the following.

- add_user_message: allows us to add a message to the chatbot’s memory and tag the message as “user”

- add_ai_message: allows us to add a message to the chatbot’s memory and tag the message as “AI”

!pip install langchain

from langchain.memory import ChatMessageHistory

history = ChatMessageHistory()

history.add_user_message("Hi!")

history.add_ai_message("Hello, how can I help you today?")

# print messages

history.messages

This class allows you to do various things, but in its simplest use you can think of it as appending messages to a list over time. You can then review all the messages you have added simply by iterating over the history in the following way.

for message in history.messages:
    print(message.content)

Advanced Memory with ConversationBufferMemory

The ConversationBufferMemory class behaves somewhat like the ChatMessageHistory class with respect to the message store, though it provides convenient methods to retrieve past messages.

For example, we can retrieve past messages as a list of messages or as one big string, depending on what we need. If we want to ask the LLM to summarize the past conversation, it might be useful to have the past conversation as one big string. If we want to do a detailed analysis of the past instead, we can read one message at a time by extracting a list.
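To make the difference between the two forms concrete, here is a small plain-Python sketch. The (role, content) tuples and the helper `as_single_string` are assumptions made for this illustration and do not reproduce LangChain's own classes.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, content); a hypothetical format for this sketch


def as_single_string(messages: List[Message]) -> str:
    """Join the messages into one 'Role: content' line per message."""
    return "\n".join(f"{role}: {content}" for role, content in messages)


messages = [
    ("Human", "Hi, how are you?"),
    ("AI", "Hi, I'm fine! How are you?"),
]

# List form: inspect one message at a time.
for role, content in messages:
    print(role, "->", content)

# String form: convenient to paste into a summarization prompt.
transcript = as_single_string(messages)
print(transcript)
```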

With the ConversationBufferMemory class, we can also add messages to the history using the add_user_message and add_ai_message methods, which are available through its chat_memory attribute.

The load_memory_variables method, on the other hand, is used to extract the past messages. It returns a dictionary whose history entry holds the messages, as a string or as a list depending on how the memory is configured. Let’s see an example.

from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory()

memory.chat_memory.add_user_message("Hi, how are you?")

memory.chat_memory.add_ai_message("Hi, I'm fine! How are you?")

memory_variables = memory.load_memory_variables({})

print(memory_variables['history'])

Manage Memory in Multiple Conversations

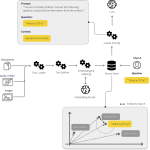

We have seen toy examples of how to manage memory by saving and retrieving messages. But in a real-world application you will probably need to manage the memory of several conversations. LangChain allows you to handle this case as well, with the use of what are called chains.

A chain is nothing more than a workflow of various simple or complex steps that allow you to achieve a certain goal.

For example, an LLM that looks up a piece of information on Wikipedia because it does not know how to answer a certain question is a chain.
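The idea of a workflow of steps can be sketched in a few lines of plain Python. This is only a toy illustration under the assumption that every step maps a string to a string; `run_chain`, `normalize`, and `build_prompt` are hypothetical names, and a real chain would typically call an LLM or an external tool in one of its steps.

```python
from typing import Callable, List

Step = Callable[[str], str]


def run_chain(steps: List[Step], text: str) -> str:
    """Pass the text through each step in order and return the final result."""
    for step in steps:
        text = step(text)
    return text


def normalize(question: str) -> str:
    # Step 1: clean up the raw user input.
    return question.strip().lower()


def build_prompt(question: str) -> str:
    # Step 2: wrap the cleaned question in a prompt template.
    return f"Answer briefly: {question}"


result = run_chain([normalize, build_prompt], "  What is LangChain?  ")
print(result)
```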

To handle several conversations, it is enough to associate a ConversationBufferMemory with each chain, where each chain is created by instantiating the ConversationChain class.

This way, when the predict method of the chain is called, all the steps of the chain are run, so the model will read the past messages of the conversation.

Let’s look at a simple example.

from langchain.llms import OpenAI

from langchain.chains import ConversationChain

from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory()

# OpenAI() reads the API key from the OPENAI_API_KEY environment variable

conversation = ConversationChain(llm=OpenAI(), memory=memory)

conversation.predict(input="Hey! How are you?")
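The example above creates a single conversation. To illustrate how several conversations can be kept apart, here is a plain-Python sketch that stores one message list per conversation id. The `memories` dictionary, the `add_exchange` helper, and the ids are hypothetical; with LangChain, the equivalent would be to keep one ConversationChain, each with its own ConversationBufferMemory, per conversation.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple

Message = Tuple[str, str]  # (role, content); a hypothetical format for this sketch

# One independent message list per conversation id.
memories: DefaultDict[str, List[Message]] = defaultdict(list)


def add_exchange(conversation_id: str, user_text: str, ai_text: str) -> None:
    """Record one user message and the AI reply in the given conversation."""
    memories[conversation_id].append(("human", user_text))
    memories[conversation_id].append(("ai", ai_text))


add_exchange("alice", "Hi, I'm Alice.", "Hello Alice!")
add_exchange("bob", "Hi, I'm Bob.", "Hello Bob!")
add_exchange("alice", "What is my name?", "Your name is Alice.")

# Each conversation only sees its own history.
print(len(memories["alice"]))
print(len(memories["bob"]))
```

Keeping the histories separate is what prevents one user's context from leaking into another user's answers.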

In conclusion, memory is a critical component of a chatbot, and LangChain provides several tools to manage memory effectively. Through the use of classes such as ChatMessageHistory and ConversationBufferMemory, you can capture and store user interactions with the AI, and use this information to guide future AI responses. I hope this information helps you build smarter and more capable chatbots!

In the next article, I will show you how to use LangChain tools.

If you found this article useful, follow me here on Medium! 😉

Marcello Politi